Overview

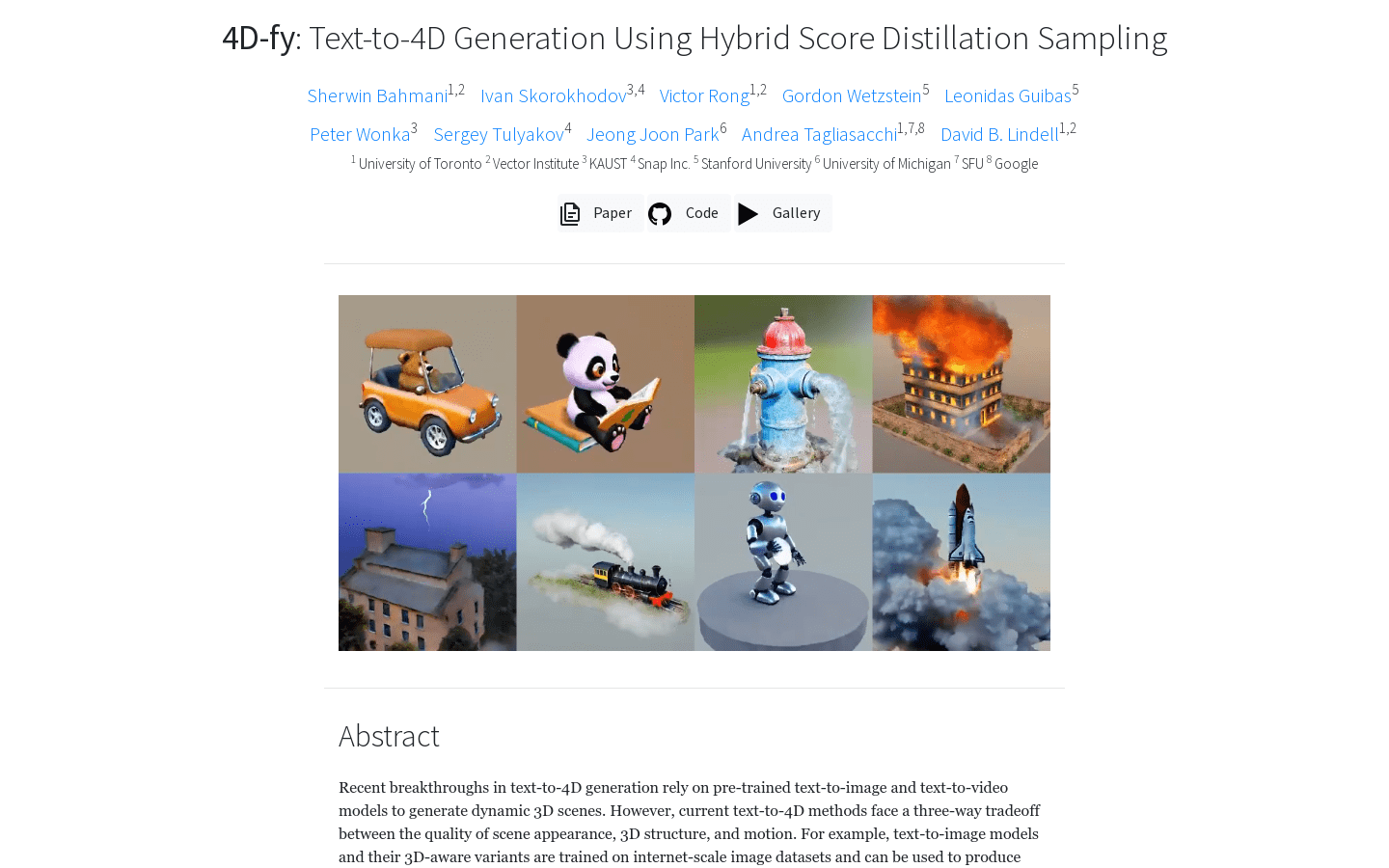

4D-fy is a text-to-4D generation framework built on hybrid score distillation sampling (hybrid SDS) and a neural radiance field parameterization. It combines supervision signals from multiple pre-trained diffusion models to generate high-quality 4D scenes directly from text prompts.

At its core, 4D-fy parameterizes a 4D radiance field with a neural representation that combines static and dynamic multi-scale hash-table features. Images and videos are then rendered from this representation by volume rendering.
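Volume rendering here means the standard NeRF quadrature: densities and colors sampled along a camera ray are alpha-composited front to back. The NumPy sketch below shows that step for a single ray. It assumes the densities and colors have already been queried from the 4D field at one time t, and the function name `volume_render` is hypothetical. Rendering a video would repeat this for each frame's timestamp.

```python
import numpy as np


def volume_render(sigmas, colors, deltas):
    """Alpha-composite samples along one ray (standard NeRF quadrature).

    sigmas: (N,) non-negative densities at the samples, front to back
    colors: (N, 3) RGB values at the samples
    deltas: (N,) distances between adjacent samples
    Returns the composited RGB color and the per-sample weights.
    """
    # Opacity contributed by each sample segment.
    alphas = 1.0 - np.exp(-sigmas * deltas)
    # Transmittance T_i = prod_{j<i} (1 - alpha_j): light that survives to sample i.
    trans = np.cumprod(np.concatenate([[1.0], 1.0 - alphas[:-1]]))
    weights = trans * alphas
    rgb = (weights[:, None] * colors).sum(axis=0)
    return rgb, weights
```

For example, a ray whose only dense sample is red composites to (approximately) pure red, and a ray through empty space composites to black.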

Optimization proceeds in stages. First, the representation is optimized with gradients from a 3D-aware text-to-image model (3D-T2I), which provides a consistent 3D structure. Next, gradients from a general-purpose text-to-image model (T2I) are added to refine appearance. Finally, gradients from a text-to-video model (T2V) are incorporated to add motion, producing 4D scenes with detailed appearance, coherent spatial structure, and fluid motion.
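The staged schedule can be sketched as a function that decides which models supply score distillation sampling (SDS) gradients at a given optimization step. This is a minimal sketch under stated assumptions: the stage boundaries in `STAGE_ENDS`, the function name `guidance_sources`, and the stage-3 alternation controlled by `p_video` are all hypothetical. The description above only fixes the order in which the 3D-T2I, T2I, and T2V models are introduced.

```python
import random

# Hypothetical stage boundaries, in optimization steps (assumed values).
STAGE_ENDS = (10_000, 20_000)


def guidance_sources(step, rng, p_video=0.5):
    """Return the names of the models whose SDS gradients drive this update."""
    if step < STAGE_ENDS[0]:
        return ["3D-T2I"]            # stage 1: 3D-aware guidance only
    if step < STAGE_ENDS[1]:
        return ["3D-T2I", "T2I"]     # stage 2: add T2I to refine appearance
    # Stage 3 (assumed alternation): some updates use T2V for motion,
    # the rest keep the image-based guidance so appearance does not drift.
    if rng.random() < p_video:
        return ["T2V"]
    return ["3D-T2I", "T2I"]
```

Passing an explicit `random.Random` instance keeps the schedule reproducible across runs, which matters when comparing optimization settings.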

Target Audience

The primary users of 4D-fy include professionals and organizations seeking to generate high-fidelity 4D environments from textual descriptions. This technology is particularly valuable for:

– Film and Visual Effects: Creating complex scenes such as fire, water, or other dynamic phenomena.

– Virtual Reality Development: Building immersive and interactive digital worlds.

– Advertising and Media: Designing engaging product showcases with motion-rich animations.

Use Cases

The applications of 4D-fy span various industries:

1. Film Visual Effects: A leading VFX company used 4D-fy to generate a highly detailed, realistic fire scene.

2. Virtual Reality Games: Game developers leveraged the framework to create dynamic and interactive virtual environments that adapt in real-time to user actions.

3. Product Showcases: Marketing teams used 4D-fy to design captivating product demonstrations with motion effects that grab attention and enhance user engagement.

Key Features

1. Hybrid Score Distillation Sampling:

The foundation of 4D-fy is hybrid score distillation sampling, which combines gradients from multiple pre-trained diffusion models (3D-T2I, T2I, and T2V) to optimize the generated scene.
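Score distillation sampling (SDS), introduced in DreamFusion, turns a pre-trained diffusion model into a gradient signal for a differentiable renderer. The first line below is the standard SDS gradient: $\theta$ are the scene parameters, $g$ is the renderer, $\mathbf{x}$ is a rendered image (or, for a T2V model, a rendered video), $y$ is the text prompt, $\hat{\boldsymbol{\epsilon}}_\phi$ is the model's noise prediction, and $w(t)$ is a timestep weighting. The second line is only an illustration, assuming a simple weighted sum with hypothetical weights $\lambda_k$, of how gradients from several models could be combined; 4D-fy introduces the models across stages rather than necessarily summing them with fixed weights.

```latex
\nabla_\theta \mathcal{L}_{\mathrm{SDS}}\big(\phi, \mathbf{x} = g(\theta)\big)
  = \mathbb{E}_{t,\boldsymbol{\epsilon}}\!\left[ w(t)\,
    \big(\hat{\boldsymbol{\epsilon}}_\phi(\mathbf{x}_t; y, t) - \boldsymbol{\epsilon}\big)\,
    \frac{\partial \mathbf{x}}{\partial \theta} \right],
\qquad \mathbf{x}_t = \alpha_t \mathbf{x} + \sigma_t \boldsymbol{\epsilon}

\nabla_\theta \mathcal{L}_{\mathrm{hybrid}}
  = \sum_{k \in \{\text{3D-T2I},\ \text{T2I},\ \text{T2V}\}}
    \lambda_k \, \nabla_\theta \mathcal{L}_{\mathrm{SDS}}^{(k)}
```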

2. Neural Radiance Field Parameterization:

The framework parameterizes the 4D radiance field with neural networks that combine static and dynamic multi-scale hash-table features, modeling both the scene's appearance and its motion.
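To make the static/dynamic split concrete, here is a minimal NumPy sketch of an Instant-NGP-style multi-scale hash encoding. At each level, the corners of the grid cell containing a point are hashed into a small feature table, and their features are multilinearly interpolated. A 3D grid over (x, y, z) stands in for the static features and a 4D grid over (x, y, z, t) for the dynamic ones. The names `HashGrid` and `field_features`, the table sizes and resolutions, the fourth hash constant, and concatenating the two feature sets are assumptions for illustration, not 4D-fy's actual configuration. In a full model, the features would feed a small MLP that predicts density and color, and the tables would be trained by gradient descent.

```python
import itertools

import numpy as np

# Hash constants: the first three follow Instant-NGP; the fourth (time axis) is assumed.
PRIMES = np.array([1, 2654435761, 805459861, 3674653429], dtype=np.uint64)


class HashGrid:
    """Multi-scale hash-table encoding over `dim` inputs in [0, 1]."""

    def __init__(self, dim, n_levels=4, table_size=2**14, n_feats=2,
                 base_res=16, growth=2.0, seed=0):
        rng = np.random.default_rng(seed)
        self.dim = dim
        self.table_size = table_size
        self.resolutions = [int(base_res * growth**lvl) for lvl in range(n_levels)]
        # One feature table per level, initialised with small random values.
        self.tables = [rng.uniform(-1e-4, 1e-4, (table_size, n_feats))
                       for _ in range(n_levels)]

    def _hash(self, corners):
        # XOR of (integer coordinate * constant), wrapped to the table size.
        h = np.zeros(corners.shape[0], dtype=np.uint64)
        for i in range(self.dim):
            h ^= corners[:, i].astype(np.uint64) * PRIMES[i]
        return (h % np.uint64(self.table_size)).astype(np.int64)

    def encode(self, x):
        """x: (B, dim) points in [0, 1]^dim -> (B, n_levels * n_feats) features."""
        feats = []
        for res, table in zip(self.resolutions, self.tables):
            pos = x * res
            base = np.floor(pos).astype(np.int64)
            frac = pos - base
            level = np.zeros((x.shape[0], table.shape[1]))
            # Multilinear interpolation over the 2**dim corners of each cell.
            for offset in itertools.product((0, 1), repeat=self.dim):
                offset = np.array(offset)
                w = np.prod(np.where(offset == 1, frac, 1.0 - frac), axis=1)
                level += w[:, None] * table[self._hash(base + offset)]
            feats.append(level)
        return np.concatenate(feats, axis=1)


# Hypothetical 4D field features: static (x, y, z) plus dynamic (x, y, z, t).
static_grid = HashGrid(dim=3)
dynamic_grid = HashGrid(dim=4, seed=1)


def field_features(xyz, t):
    xyzt = np.concatenate([xyz, np.full((xyz.shape[0], 1), t)], axis=1)
    return np.concatenate([static_grid.encode(xyz), dynamic_grid.encode(xyzt)], axis=1)
```

Because only the dynamic grid sees t, the static features are shared across every frame, while the dynamic features can vary over time.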

3. Multi-Modal Rendering Capabilities:

Because it is supervised by several pre-trained models, 4D-fy can produce both still images and videos while maintaining high fidelity in each.

Together, these components produce 4D scenes that are visually detailed, highly interactive, and spatially coherent, making 4D-fy a powerful tool for modern visual computing applications.