Animate Anyone

Animate Anyone: "Synthesis of consistent, controllable character animations from images."

Tags: AI video generation, AI image generation, Character Animation, Fashion Video, Human Dance, Image to Video Synthesis, Open Source, Standard Picks

Introduction to Animate Anyone

The goal of Animate Anyone is to create character videos from static images, with the motion controlled by a driving signal such as a pose sequence. Building on diffusion models, we have developed a framework designed specifically for character animation. Our approach focuses on keeping the intricate appearance details of the reference image consistent across frames while producing smooth, controllable character movement.

Key Components

To achieve these goals, Animate Anyone combines three components:

ReferenceNet Module

The ReferenceNet module is designed to merge detailed image features using spatial attention mechanisms. This allows the system to preserve complex appearance characteristics from reference images while generating animations. The integration of spatial attention ensures that important visual elements are consistently maintained throughout the animation process.
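As a rough illustration of merging reference features through spatial attention, the sketch below concatenates the feature vectors of a generated frame with those of the reference image, runs self-attention over the combined set, and keeps only the outputs that belong to the frame. This is a toy under stated assumptions, not the project's implementation: the function names are hypothetical, features are plain Python lists, and the attention uses identity projections instead of learned weights.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens):
    """Scaled dot-product self-attention with identity projections (assumption: no learned weights)."""
    scale = math.sqrt(len(tokens[0]))
    output = []
    for query in tokens:
        scores = [sum(q * k for q, k in zip(query, key)) / scale for key in tokens]
        weights = softmax(scores)
        # Each output vector is a weighted average of all token vectors.
        output.append([sum(w * tok[d] for w, tok in zip(weights, tokens))
                       for d in range(len(query))])
    return output

def reference_spatial_attention(frame_tokens, reference_tokens):
    """Let every frame position attend to frame and reference positions alike.

    frame_tokens / reference_tokens: lists of equal-length feature vectors,
    one vector per spatial position (hypothetical layout).
    """
    combined = frame_tokens + reference_tokens   # concatenate along the spatial axis
    attended = self_attention(combined)
    return attended[:len(frame_tokens)]          # keep only the frame's positions

frame = [[1.0, 0.0], [0.0, 1.0]]      # two positions in the frame being generated
reference = [[1.0, 1.0]]              # one position from the reference image
merged = reference_spatial_attention(frame, reference)
print(len(merged), len(merged[0]))
```

Because each frame position can draw on every reference position, appearance details from the reference image remain available wherever they are needed in the generated frame.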

Pose Guidance System

Our efficient pose guidance module provides precise control over character movements. It conditions generation on a driving pose sequence, so the animated character follows the supplied motion smoothly and naturally. It is the component that determines how characters move within the generated video.
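One simple way to picture pose conditioning is to bring a pose map down to the resolution of the diffusion latent and add it to the noisy latent before denoising. The sketch below does that with average pooling as a stand-in for a learned pose encoder. Everything here is an assumption made for illustration: the function names, the single-channel 2D layout, and the use of pooling are hypothetical and are not taken from the project.

```python
def average_pool(grid, factor):
    """Downsample a 2D grid by averaging non-overlapping factor x factor blocks."""
    rows, cols = len(grid) // factor, len(grid[0]) // factor
    return [
        [
            sum(grid[r * factor + i][c * factor + j]
                for i in range(factor) for j in range(factor)) / factor ** 2
            for c in range(cols)
        ]
        for r in range(rows)
    ]

def add_pose_guidance(noisy_latent, pose_map, factor):
    """Add pooled pose features to a noisy latent of matching size.

    Assumption: average pooling stands in for a learned pose encoder.
    """
    pose_features = average_pool(pose_map, factor)
    if (len(pose_features) != len(noisy_latent)
            or len(pose_features[0]) != len(noisy_latent[0])):
        raise ValueError("pose features and latent must have the same shape")
    return [
        [z + p for z, p in zip(latent_row, pose_row)]
        for latent_row, pose_row in zip(noisy_latent, pose_features)
    ]

# A 4x4 pose map with a "joint" occupying the top-left 2x2 block.
pose = [
    [1.0, 1.0, 0.0, 0.0],
    [1.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]
latent = [[0.0, 0.0], [0.0, 0.0]]
guided = add_pose_guidance(latent, pose, factor=2)
print(guided)
```

Adding the pose signal at the input keeps the conditioning path cheap, since the denoising network itself needs no extra branches to receive it.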

Temporal Modeling Approach

The temporal modeling approach employed by Animate Anyone ensures seamless transitions between video frames. This technique minimizes abrupt changes and discontinuities, resulting in fluid and realistic animations. It plays a vital role in maintaining the visual coherence of the generated videos.
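A common way to model time in video diffusion is attention along the frame axis: for each spatial position, the feature vectors from all frames attend to one another, and the result is added back to the input as a residual. The sketch below shows that idea on nested Python lists. It is a simplified illustration under assumptions (hypothetical function names, identity projections instead of learned weights), not the project's actual temporal layer.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens):
    """Scaled dot-product self-attention with identity projections (assumption: no learned weights)."""
    scale = math.sqrt(len(tokens[0]))
    output = []
    for query in tokens:
        scores = [sum(q * k for q, k in zip(query, key)) / scale for key in tokens]
        weights = softmax(scores)
        output.append([sum(w * tok[d] for w, tok in zip(weights, tokens))
                       for d in range(len(query))])
    return output

def temporal_attention(video_features):
    """Attend across frames independently at every spatial position.

    video_features[t][p] is the feature vector at frame t, position p
    (hypothetical layout).
    """
    num_frames = len(video_features)
    num_positions = len(video_features[0])
    output = [[None] * num_positions for _ in range(num_frames)]
    for p in range(num_positions):
        # Gather the same position from every frame into one sequence.
        track = [video_features[t][p] for t in range(num_frames)]
        attended = self_attention(track)
        for t in range(num_frames):
            # Residual connection: input plus the temporally mixed features.
            output[t][p] = [x + a for x, a in zip(track[t], attended[t])]
    return output

video = [
    [[1.0, 0.0], [0.0, 1.0]],   # frame 0, two positions
    [[0.8, 0.2], [0.1, 0.9]],   # frame 1
    [[0.6, 0.4], [0.2, 0.8]],   # frame 2
]
smoothed = temporal_attention(video)
print(len(smoothed), len(smoothed[0]), len(smoothed[0][0]))
```

Mixing features across frames at the same position pulls neighboring frames toward each other, which is what suppresses flicker and abrupt changes.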

Extended Capabilities

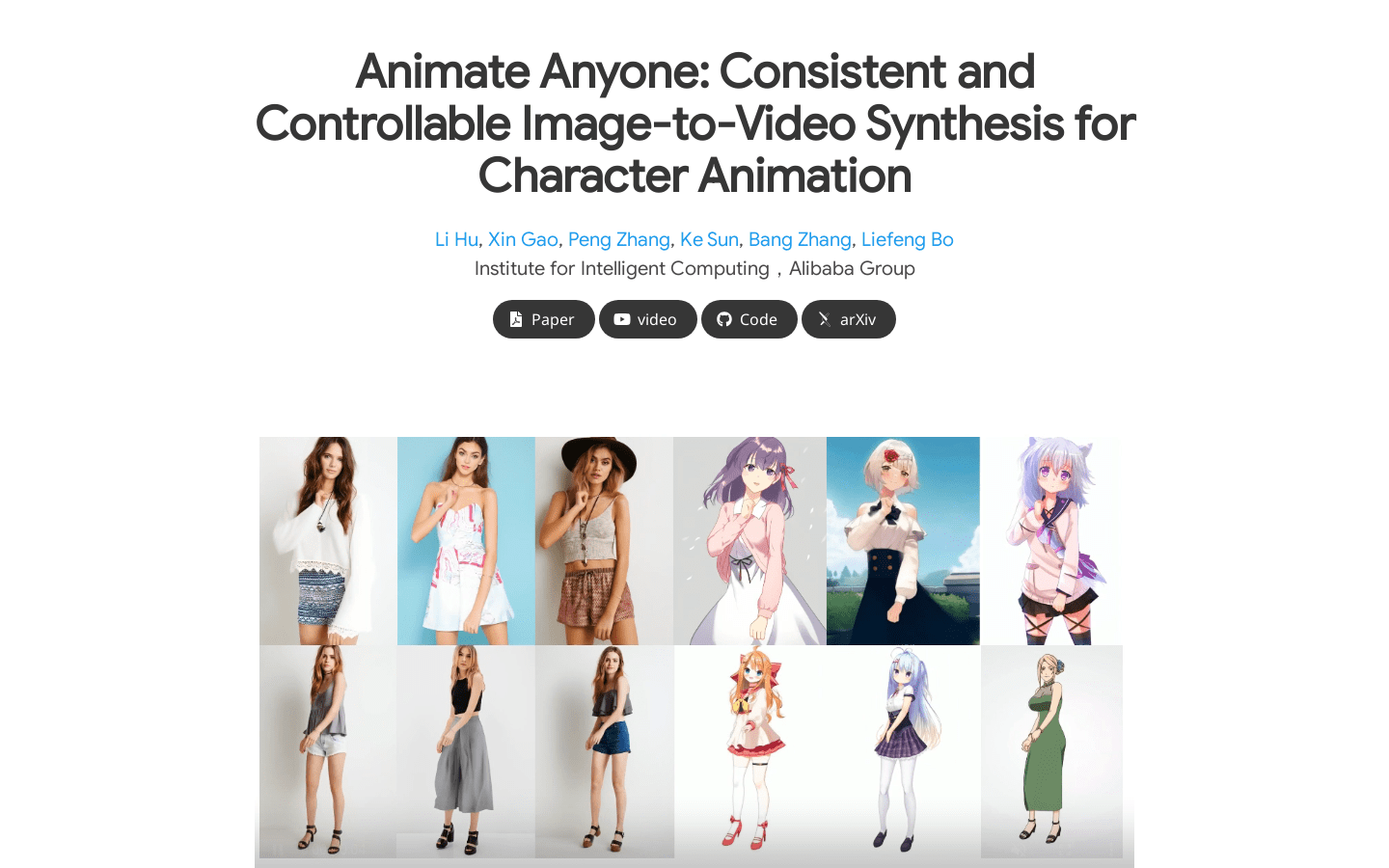

By expanding our training dataset, Animate Anyone has achieved remarkable results in animating diverse characters. This includes, but is not limited to:

- Transforming fashion photos into lifelike animated videos

- Synthesizing human dance sequences from the TikTok dataset

- Animating anime and cartoon characters with exceptional detail

Evaluation and Results

Our method has been evaluated on benchmarks for fashion video synthesis and human dance animation. The results demonstrate that Animate Anyone outperforms existing image-to-video approaches, achieving state-of-the-art performance in these domains.

Key Features of Animate Anyone:

- Generates high-quality character videos from static images, controlled by driving signals

- Leverages cutting-edge diffusion models for realistic animation generation

- Merges detailed image features using spatial attention via the ReferenceNet module

- Employs an efficient pose guidance system to control character movements

- Uses effective temporal modeling to ensure smooth frame transitions

- Supports animation of any character type through extended training data

- Achieves superior results on leading benchmarks for fashion video and dance synthesis

With its innovative approach and robust performance, Animate Anyone represents a significant advancement in the field of character animation. Its ability to transform static images into dynamic, lifelike videos makes it an invaluable tool for creators, designers, and researchers alike.