Generative Rendering: 2D Mesh

Generative Rendering: 2D Mesh:AI-Controlled Video Generation

Tags:AI video generationAI 3D Tools AI video generation Control Model Diffusion model Dynamic Mesh Open Source Standard Picks Video generationIntroduction to Our Novel Video Generation Method

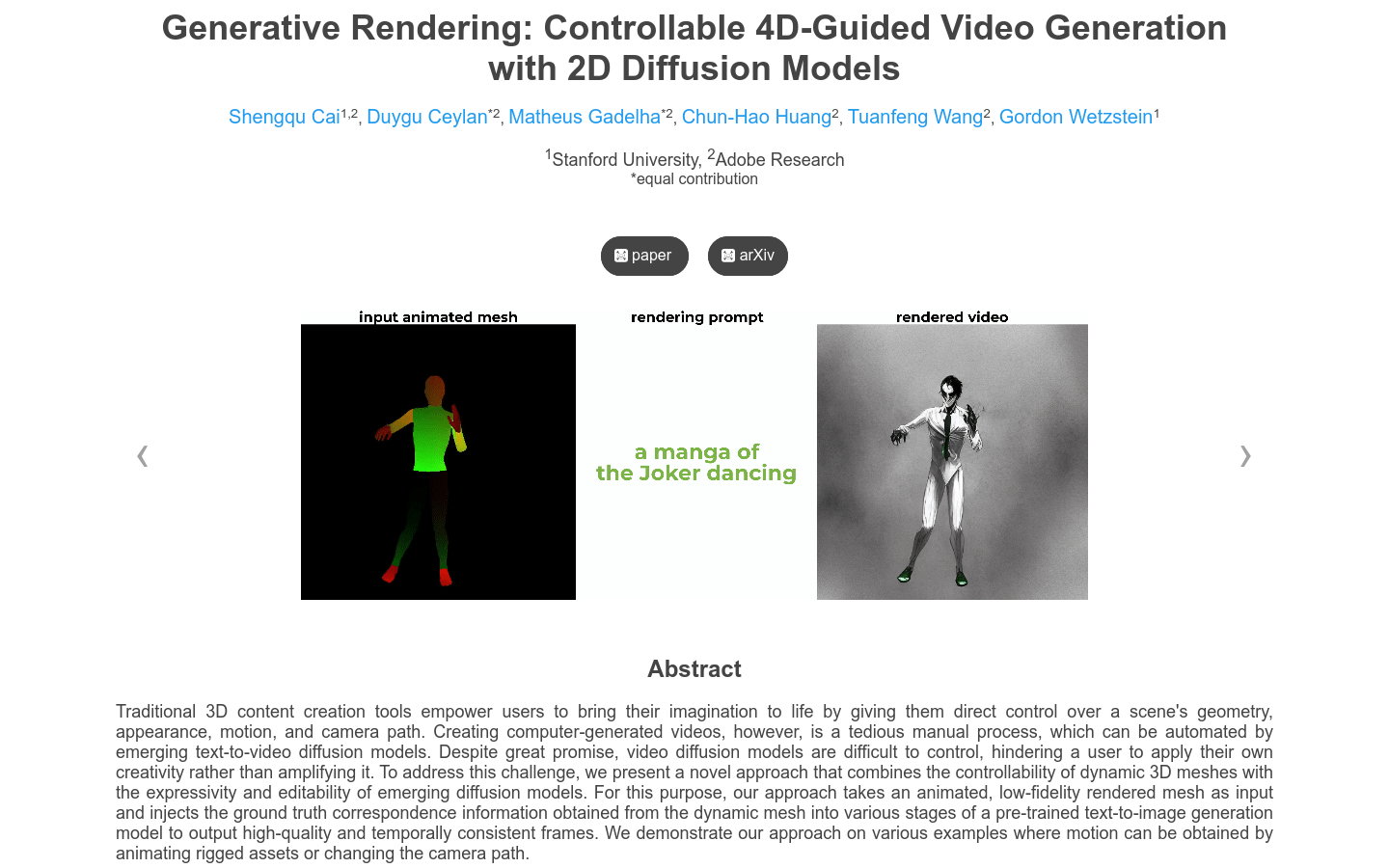

The traditional 3D content creation process offers users direct control over scene geometry, appearance, actions, and camera movement, making it a powerful tool for bringing imagination to life. However, the manual effort required to generate computer-generated videos remains a significant challenge in the industry. While text-to-video diffusion models have shown promise in automating this process, they currently lack sufficient user control, limiting their creative potential. To overcome these limitations, we introduce an innovative approach that merges the precise controllability of dynamic 3D meshes with the expressive capabilities of modern diffusion models.

How Our Method Works

Our solution takes animated low-fidelity rendering meshes as input and integrates ground truth correspondences derived from these dynamic meshes into various stages of a pre-trained text-to-image generation model. This integration ensures the output is not only high-quality but also temporally consistent across frames. By leveraging the strengths of both 3D meshes and diffusion models, we achieve a balance between creative control and automated video generation.

Applications and Use Cases

Our method finds its application in several key areas within the content creation industry:

1. Animation Production

Create realistic animation scenes using our generative rendering model, enabling more efficient production pipelines for animators.

2. Special Effects Production

Generate high-quality special effects video clips that meet the demanding requirements of modern filmmaking.

3. Film and Television Post-Production

Enhance post-production workflows by applying our model to create intricate visual effects for movies and television programs.

Key Features of Our Approach

- Input Acceptance: The method accepts UV and depth maps from animated 3D scenes, providing a solid foundation for video generation.

- Depth-Conditional ControlNet Utilization: By incorporating the depth-conditional ControlNet, we ensure consistent frame generation while maintaining alignment through UV correspondences.

- Noise Initialization in UV Space: For each object, initial noise is introduced in the UV space and rendered into individual images, enhancing flexibility and control.

- Attention Expansion During Diffusion: Each diffusion step employs expanded attention to extract both pre-processed and post-processed attention features from a set of key frames.

- UV Space Projection and Unification: Post-processed attention features are projected back into the UV space, ensuring seamless integration across frames.

- Frame Generation Process: The final video frames are generated by combining expanded attention outputs with a weighted blend of pre-processed and post-processed features from key frames, along with their corresponding UV data.

This approach not only maintains the controllability of traditional 3D methods but also harnesses the creative potential of advanced diffusion models, marking a significant advancement in video generation technology. Our method provides a versatile and powerful tool for professionals in animation, special effects, and post-production, enabling them to realize their creative vision with greater efficiency and precision.