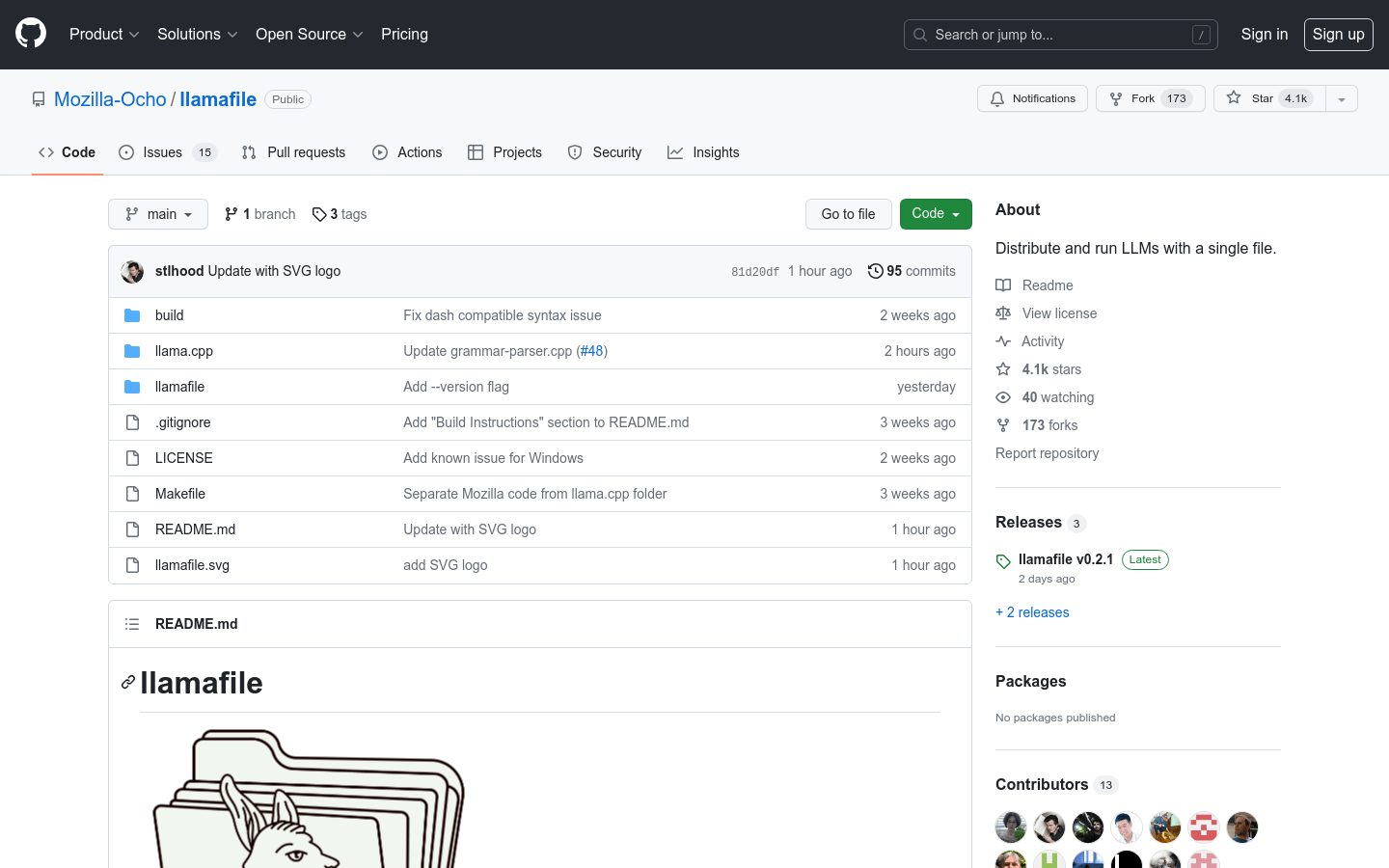

Llamafile

Llamafile: Pack an LLM into a Single Executable

Tags: AI model, AI tools, Cross-Platform, Executable File, LLM, Open Source, Standard Picks

Overview

Llamafile is a tool for packaging a Large Language Model (LLM), including its weights, into a single self-contained executable file. It combines llama.cpp, which performs the model inference, with the Cosmopolitan Libc library, which lets one binary run on many operating systems, to produce a file known as a “llamafile.” Users can run such a file locally on most computers without installing or configuring anything else. The primary goal of Llamafile is to make open-source LLMs more accessible to both developers and end users.
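As a rough illustration of what "no installation" means in practice, the sketch below prepares a downloaded llamafile for launch. It assumes the steps the llamafile project describes for its releases: on Linux, macOS and the BSDs the file only needs its executable permission bit set, while on Windows it needs an `.exe` suffix. The function name `prepare_llamafile` and the file name used in the usage note are hypothetical.

```python
import stat
import sys
from pathlib import Path


def prepare_llamafile(path: str) -> Path:
    """Make a downloaded llamafile launchable on the current OS (sketch).

    Assumption: Windows needs an .exe suffix, while Unix-like systems
    need the executable permission bit.
    """
    p = Path(path)
    if sys.platform == "win32":
        if p.suffix.lower() != ".exe":
            # Rename e.g. model.llamafile -> model.llamafile.exe
            p = p.rename(p.with_name(p.name + ".exe"))
    else:
        # Add execute permission for owner, group and others (similar to chmod +x).
        mode = p.stat().st_mode
        p.chmod(mode | stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH)
    return p
```

For example, `prepare_llamafile("model.llamafile")` returns the path to launch; on Linux or macOS you would then start it from a shell with `./model.llamafile`.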

Target Users

Llamafile is aimed at two main groups of users:

- Quick Trial of LLMs: Users who want to experiment with an LLM without an elaborate setup benefit from its ease of use.

- Publishing and Sharing Pre-Trained LLMs: Researchers and developers can use the tool to package their own trained or fine-tuned models and distribute them as a single file.

Use Cases

Typical use cases include:

- Users can download a pre-built llamafile and chat with, or ask questions of, an LLM entirely on their own machine.

- Researchers can package models they have trained or fine-tuned with other tools as llamafiles and share them with collaborators.

- Developers can add LLM capabilities to their products by running a ready-made llamafile and calling it from their own code.
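For the developer use case, one common pattern is to start a llamafile so that it runs its local HTTP server, then call that server from application code. The sketch below uses only the Python standard library and assumes the server listens on `http://localhost:8080` and exposes an OpenAI-compatible `/v1/chat/completions` endpoint, as the llamafile README describes; check the documentation for your version if the address or path differs. The helper names `build_chat_request` and `ask` are hypothetical, and `"LLaMA_CPP"` is only a placeholder model name.

```python
import json
import urllib.request

# Assumption: a llamafile server is listening on its default local address.
BASE_URL = "http://localhost:8080"


def build_chat_request(prompt: str, model: str = "LLaMA_CPP") -> dict:
    """Build an OpenAI-style chat completion payload (model name is a placeholder)."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": prompt},
        ],
    }


def ask(prompt: str, base_url: str = BASE_URL, timeout: float = 120.0) -> str:
    """POST the prompt to the local server and return the assistant's reply text."""
    payload = json.dumps(build_chat_request(prompt)).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=payload,
        # Assumption: the local server does not require a real API key.
        headers={"Content-Type": "application/json", "Authorization": "Bearer no-key"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.load(resp)
    # OpenAI-compatible responses carry the text in choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

Usage: `print(ask("Write a haiku about portable binaries."))`. Keeping the payload builder separate from the network call makes it easy to test, or to swap in another OpenAI-compatible client later.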

Features

Key features include:

- Cross-Platform Compatibility: The same file runs on x86-64 and ARM64 CPUs and on multiple operating systems, including Windows, Linux, macOS, and several BSDs.

- Built-In Model Weights: A llamafile can embed the model weights inside the executable, so no separate model download or installation is needed.

- User-Friendly Interfaces: Offers both a command-line interface (CLI) and a local HTTP server, so users can pick whichever suits their workflow.

- Accelerated Performance: Supports GPU acceleration through CUDA (NVIDIA) and Metal (Apple), which speeds up inference on machines with a supported GPU.
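To see how the built-in weights are stored, the sketch below lists the model files inside a llamafile. It assumes the layout the llamafile project documents for building its files: the executable also contains a ZIP archive, and the GGUF weights are added to it as an uncompressed ("stored") entry so they can be memory-mapped directly. Python's `zipfile` module can read such a file because it tolerates data placed in front of the archive, as in self-extracting executables. The function name `list_embedded_weights` is hypothetical.

```python
from __future__ import annotations

import zipfile


def list_embedded_weights(path: str) -> list[tuple[str, int, bool]]:
    """Return (name, size_in_bytes, is_stored) for each .gguf entry in a llamafile.

    Assumption: the weights live in a ZIP archive embedded in the executable.
    zipfile locates the archive from its end record, so the executable code
    in front of it does not get in the way.
    """
    with zipfile.ZipFile(path) as zf:
        return [
            # is_stored is True when the entry is uncompressed (ZIP_STORED).
            (info.filename, info.file_size, info.compress_type == zipfile.ZIP_STORED)
            for info in zf.infolist()
            if info.filename.lower().endswith(".gguf")
        ]
```

Calling `list_embedded_weights("model.llamafile")` on a real llamafile should show the embedded `.gguf` file and its size; entry names vary depending on how the file was built.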

By bundling a model and its runtime into one file, Llamafile simplifies both distributing and running LLMs across a wide range of machines, which makes it a practical option for anyone working with large language models.