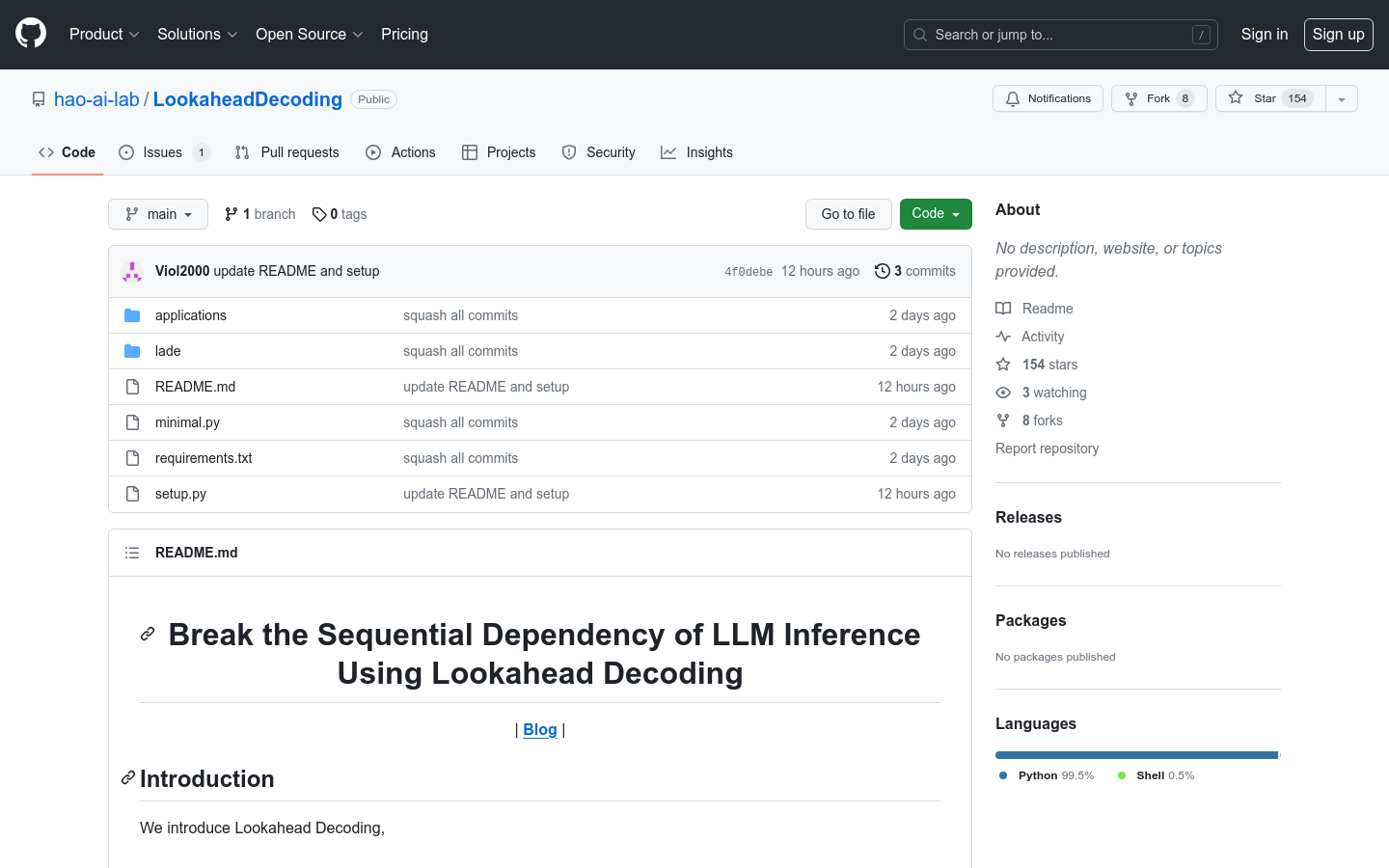

Lookahead Decoding

Lookahead Decoding: Decoupling Sequential Dependencies in LLMs

Introduction to Lookahead Decoding

Lookahead Decoding is an inference method that speeds up decoding in large language models (LLMs). Standard autoregressive decoding produces one token per forward pass, so each token must wait for the one before it. Lookahead Decoding relaxes this sequential dependency: it guesses several future tokens in parallel and verifies them, so a single step can accept more than one token and fewer decoding steps are needed overall.

Key Benefits:

Break the Sequential Dependency

In traditional decoding, tokens are generated strictly one after another, so inference speed is bounded by the number of sequential forward passes. Lookahead Decoding eases this bottleneck by proposing and checking multiple candidate tokens in parallel within each step, instead of waiting for every prior token to be produced before proposing the next one.
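The idea of updating many future positions at once can be illustrated with Jacobi iteration, the fixed-point method Lookahead Decoding builds on. This is a toy sketch under stated assumptions, not the library's implementation: `toy_model` is a hypothetical deterministic function standing in for an LLM's greedy (argmax) next-token choice, and each "pass" represents one batched forward pass that recomputes every future position from the previous guess.

```python
from typing import Callable, List, Tuple

Token = int
# Hypothetical stand-in for an LLM: maps a token prefix to its greedy
# (argmax) next token. Any deterministic function works for this sketch.
Model = Callable[[List[Token]], Token]


def greedy_decode(model: Model, prompt: List[Token], n: int) -> List[Token]:
    """Baseline autoregressive decoding: n sequential model calls."""
    seq = list(prompt)
    for _ in range(n):
        seq.append(model(seq))
    return seq[len(prompt):]


def jacobi_decode(model: Model, prompt: List[Token], n: int,
                  init: Token = 0) -> Tuple[List[Token], int]:
    """Refine all n future positions together until they stop changing.

    Returns the decoded tokens and the number of parallel passes used.
    """
    guess = [init] * n
    for passes in range(1, n + 1):
        # Every new prediction reads only the *previous* guess, so a real
        # model could compute all n positions in one batched forward pass.
        new = [model(list(prompt) + guess[:i]) for i in range(n)]
        if new == guess:  # fixed point: identical to the greedy output
            return guess, passes
        guess = new
    # After k passes at least the first k positions are correct, so after
    # n passes the whole guess equals the greedy output.
    return guess, n


def toy_model(seq: List[Token]) -> Token:
    """Hypothetical toy 'LLM' whose next token depends on the last token."""
    return (seq[-1] * 3 + 1) % 7


tokens, passes = jacobi_decode(toy_model, [2], 6)
print(tokens, passes)
```

On this toy model the iteration reaches the greedy output but fixes only about one new position per pass. That is why Lookahead Decoding does not rely on plain Jacobi iteration alone: it also harvests n-grams from the iteration trajectory and verifies them.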

Enhance Inference Efficiency

Standard decoding is chain-like: each step depends on the result of the previous one. Lookahead Decoding spends extra computation per step, which modern GPUs can often absorb in parallel, in exchange for fewer sequential steps. This makes it a good fit for latency-sensitive applications, such as those requiring real-time responses.
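Trading extra work per step for fewer steps can be sketched as guess-and-verify: candidate continuations (n-grams) are looked up by the last generated token and checked against greedy predictions, and the longest agreeing prefix is accepted. This is a simplified, hypothetical sketch: `verify`, `decode_with_pool`, the `pool` contents, and `toy_model` are illustrative names; the pool is filled by hand rather than by the lookahead (Jacobi) branch; and candidates are checked one model call at a time instead of in a single batched forward pass.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Token = int
# Hypothetical stand-in for an LLM's greedy next-token function.
Model = Callable[[List[Token]], Token]
# n-gram pool: maps a token to guessed continuations that followed it.
Pool = Dict[Token, List[Tuple[Token, ...]]]


def verify(model: Model, seq: List[Token],
           candidates: Sequence[Tuple[Token, ...]]) -> List[Token]:
    """Return the longest candidate prefix that greedy decoding agrees with,
    plus the one extra token the model predicts right after it."""
    best: List[Token] = []
    for cand in candidates:
        accepted: List[Token] = []
        for tok in cand:
            if model(seq + accepted) != tok:
                break  # first mismatch: reject the rest of this guess
            accepted.append(tok)
        if len(accepted) > len(best):
            best = accepted
    # Verification also yields the prediction after the accepted prefix,
    # so every step emits at least one (greedy-correct) token.
    return best + [model(seq + best)]


def decode_with_pool(model: Model, prompt: List[Token], n: int,
                     pool: Pool) -> Tuple[List[Token], int]:
    """Greedy-equivalent decoding that may accept several tokens per step."""
    seq = list(prompt)
    steps = 0
    while len(seq) - len(prompt) < n:
        seq.extend(verify(model, seq, pool.get(seq[-1], [])))
        steps += 1
    return seq[len(prompt):len(prompt) + n], steps


def toy_model(seq: List[Token]) -> Token:
    """Hypothetical toy 'LLM' whose next token depends on the last token."""
    return (seq[-1] * 3 + 1) % 7


# Hand-filled pool: one wrong guess and two correct ones (illustrative only).
pool: Pool = {2: [(0, 3, 3), (0, 1, 4)], 6: [(5, 2, 0)]}
tokens, steps = decode_with_pool(toy_model, [2], 6, pool)
print(tokens, steps)
```

With this pool, two steps produce the six greedy tokens; with an empty pool the loop falls back to one token per step. In both cases the output matches plain greedy decoding, because a guessed token is only kept when the model's own prediction agrees with it.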

Getting Started:

Implementation Guide

Integrating Lookahead Decoding into an existing workflow is designed to require few changes: import the Lookahead Decoding library and enable it in your generation code, leaving the rest of the pipeline largely unchanged.

Supported Models and Decoding

Currently, Lookahead Decoding supports:

- LLaMA (Large Language Model Meta AI) models

- Greedy search decoding (greedy search is a decoding strategy, not a model)

Use Cases and Applications:

Practical Scenarios for Enhancing Performance

1. Improve Inference Speed in Your Projects: Use Lookahead Decoding to speed up existing LLM-based applications, reducing response times while producing the same output as greedy decoding.

2. Demonstration with minimal.py: Run the provided minimal.py script to observe first-hand the speed improvement Lookahead Decoding can deliver on your own hardware.

3. Advanced Chatbot Interactions: Use Lookahead Decoding in chatbot applications to reduce response latency, making conversations feel more fluid and responsive.

Features Overview:

Core Capabilities of Lookahead Decoding

- BREAKS SEQUENTIAL DEPENDENCY IN LLM INFERENCE: Proposes and verifies multiple tokens in parallel within each decoding step.

- IMPROVES INFERENCE EFFICIENCY: Reduces the number of sequential decoding steps, lowering generation latency.

- SUPPORTED MODELS: Currently compatible with LLaMA models using greedy search decoding.

Lookahead Decoding offers a way to speed up LLM inference without changing the model's output under greedy decoding. By adopting it, developers can reduce generation latency in their applications.