Overview

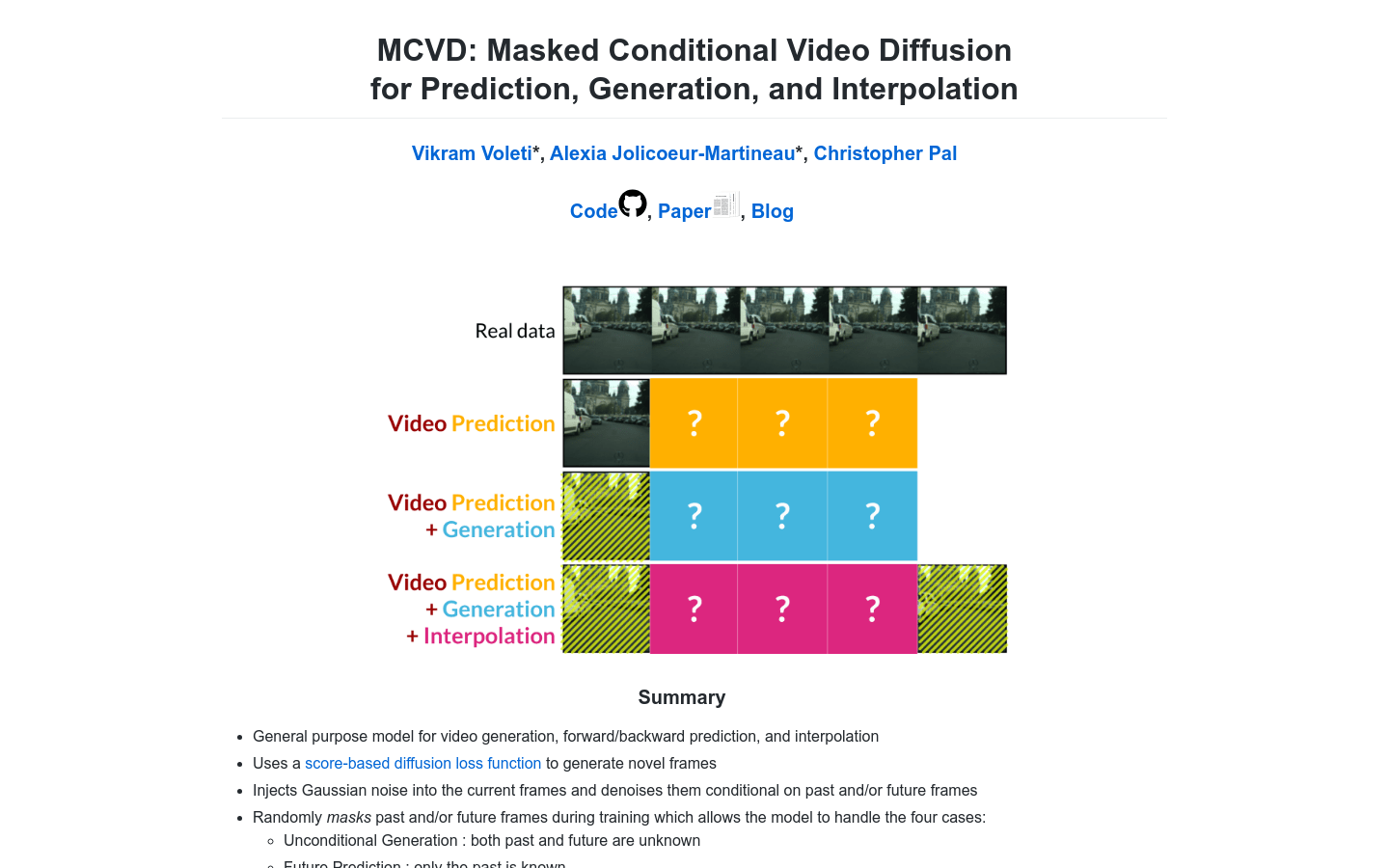

The MCVD model represents a versatile framework designed for video generation, prediction, and interpolation tasks. It leverages a score-based diffusion loss function to create new frames by introducing Gaussian noise into the current frame while incorporating past and/or future frames during the denoising process. During training, the model randomly masks past and/or future frames to develop four key capabilities: unconditional generation, future prediction, past reconstruction, and interpolation. The architecture of MCVD employs a 2D convolutional U-Net structure that effectively conditions on past and future frames using either concatenated or spatiotemporal adaptive normalization techniques. This approach ensures the production of high-quality and personalized video samples. Notably, the model is efficient enough to run on 1-4 GPUs but can be scaled up for larger-scale operations. As a simple non-recursive 2D convolutional design, MCVD stands out by generating videos of arbitrary lengths while achieving state-of-the-art (SOTA) performance in its domain.

Target Users

The primary users and applications of the MCVD model are focused on three main areas: video generation, prediction, and interpolation. These functionalities make it particularly valuable for creative industries, technical simulations, and dynamic content creation where temporal coherence and high visual quality are paramount.

Use Cases

- Movie Effect Generation: MCVD can be employed to generate complex visual effects in movies by creating intermediate frames or altering existing sequences with realistic textures and movements.

- Video Game Development: The model assists game developers in generating high-quality animations, predicting in-game scenarios, and interpolating smooth transitions between game states for enhanced player experiences.

- Animation Production: MCVD streamlines the animation process by automatically generating missing frames, predicting future character movements, or creating seamless interpolations between keyframes, significantly improving production efficiency.

Features

The MCVD model offers a comprehensive suite of features tailored to video manipulation tasks:

- Video Generation: The ability to synthesize entirely new videos from scratch or based on given inputs, enabling creative freedom in content creation.

- Video Prediction: Accurately forecasting future frames in a video sequence, which is crucial for applications like predictive analytics and autonomous systems.

- Video Interpolation: Inserting additional frames between existing ones to smooth out transitions or enhance visual fidelity, making it indispensable for refining low-quality videos.

This structured approach ensures that MCVD remains a robust and adaptable tool for various video-related applications, setting new benchmarks in the field of generative models.