Megatron-LM

Megatron-LM: Research on Training Transformer Models at Scale

Tags: AI model, Deep Learning, language model, Open Source, Standard Picks, Transformer

Overview of Megatron-LM

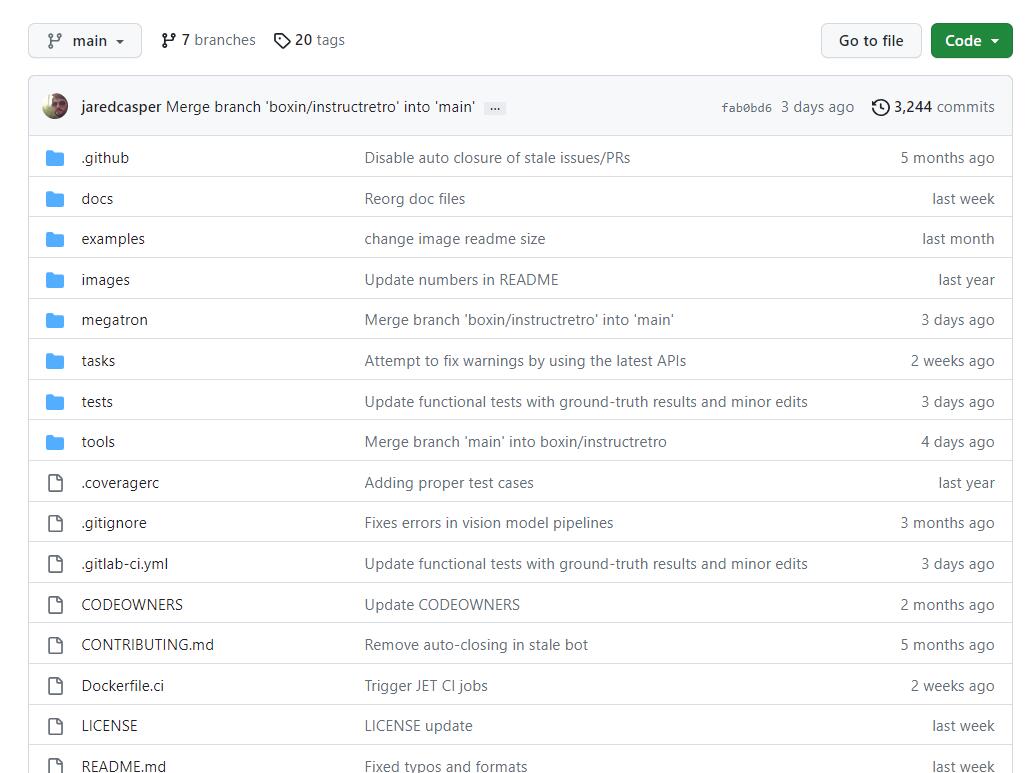

Megatron-LM is a framework for training large-scale Transformer models, developed by NVIDIA’s Applied Deep Learning Research team. Its focus is research on training very large Transformer language models. It combines mixed-precision training with efficient implementations of model parallelism and data parallelism, and it supports multi-node distributed pre-training of language models such as GPT, BERT, and T5.

Target Audience

Megatron-LM is intended for researchers and practitioners who train large-scale language models. This includes academic researchers, software developers, data scientists, and AI engineers working in natural language processing (NLP) and machine learning.

Key Features

- Efficient Large-Scale Model Training: Megatron-LM is optimized for training very large neural networks, including models with billions of parameters.

- Distributed Training Support: The framework supports both model parallelism and data parallelism. Combining the two lets training scale across multiple GPU nodes and make fuller use of the available compute.

- Built-In Transformer Model Support: Megatron-LM natively supports popular Transformer architectures such as GPT, BERT, and T5, which speeds up experimentation with these models.
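To make the two parallelism strategies in the list above concrete, here is a minimal NumPy sketch. It is an illustration under stated assumptions, not Megatron-LM's code or API: plain arrays stand in for GPUs, a Python `sum` stands in for an all-reduce, and every function name (`mlp_tensor_parallel`, `data_parallel_grad`, and so on) is hypothetical. The first part splits a two-layer MLP across workers (model parallelism); the second splits a batch across workers and averages their gradients (data parallelism). In both cases the sharded result should match the unsharded one.

```python
import numpy as np


def gelu(x):
    # tanh approximation of the GELU activation
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x**3)))


def mlp_reference(x, w1, w2):
    """Unsharded two-layer MLP: gelu(x @ w1) @ w2."""
    return gelu(x @ w1) @ w2


def mlp_tensor_parallel(x, w1, w2, world_size):
    """Simulate model (tensor) parallelism over `world_size` workers.

    w1 is split by columns and w2 by rows, so each worker can apply
    the activation to its own slice without talking to the others.
    Summing the partial outputs stands in for an all-reduce.
    """
    w1_shards = np.split(w1, world_size, axis=1)
    w2_shards = np.split(w2, world_size, axis=0)
    partials = [gelu(x @ a) @ b for a, b in zip(w1_shards, w2_shards)]
    return sum(partials)


def full_batch_grad(x, y, w):
    """Gradient of the per-sample mean squared error of x @ w against y."""
    err = x @ w - y
    return 2.0 * x.T @ err / len(x)


def data_parallel_grad(x, y, w, world_size):
    """Simulate data parallelism over `world_size` workers.

    Every worker holds a full copy of w and an equal slice of the batch.
    Averaging the local gradients stands in for an all-reduce mean.
    """
    x_shards = np.split(x, world_size)
    y_shards = np.split(y, world_size)
    grads = [full_batch_grad(xs, ys, w) for xs, ys in zip(x_shards, y_shards)]
    return sum(grads) / world_size


rng = np.random.default_rng(0)
x = rng.standard_normal((8, 16))    # batch of 8, hidden size 16
w1 = rng.standard_normal((16, 64))  # first MLP layer
w2 = rng.standard_normal((64, 16))  # second MLP layer

# Model parallelism: 4 simulated workers reproduce the unsharded output.
assert np.allclose(mlp_reference(x, w1, w2), mlp_tensor_parallel(x, w1, w2, 4))

# Data parallelism: 4 simulated workers reproduce the full-batch gradient.
y = rng.standard_normal((8, 4))
w = rng.standard_normal((16, 4))
assert np.allclose(full_batch_grad(x, y, w), data_parallel_grad(x, y, w, 4))
```

The column-then-row split is chosen so that the nonlinearity can be applied shard by shard, leaving a single reduction at the end of the block. The data-parallel average matches the full-batch gradient only because the batch is divided into equal slices; unequal slices would need a weighted average.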
