MLX

MLX: 'Machine Learning Efficiently & Flexibly on Apple Silicon'

Tags: AI development assistant, AI model, Apple silicon, Machine Learning, NumPy, Open Source, PyTorch, Standard Picks

Introduction to MLX: A Modern Machine Learning Framework for Apple Silicon

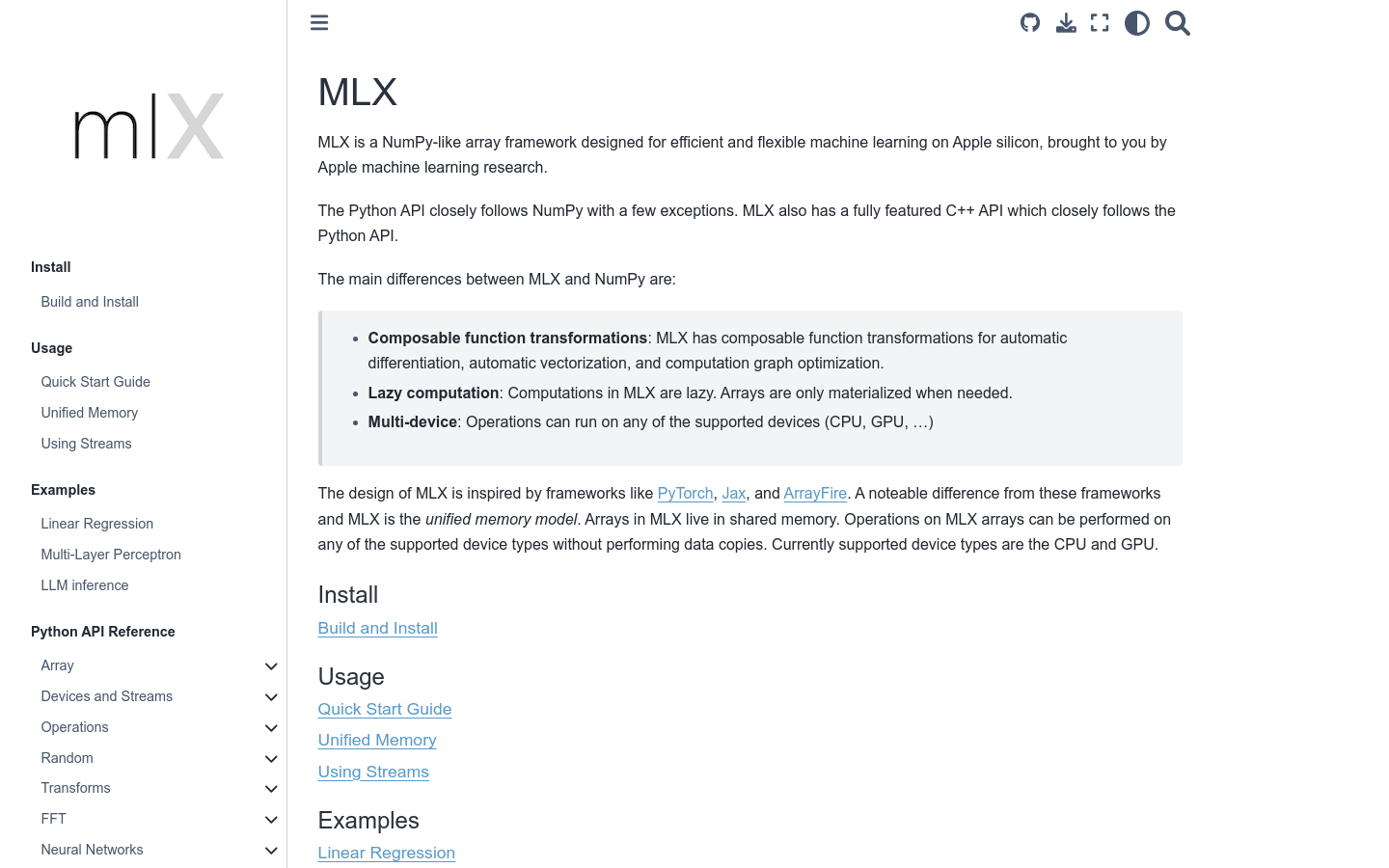

MLX is an array framework for efficient machine learning on Apple silicon, developed by Apple's machine learning research team. Its Python API closely follows NumPy, so existing array code carries over with few changes, and it adds several features designed around Apple's hardware.

Key Features and Innovations

MLX introduces several unique features that set it apart from traditional frameworks:

- Unified Memory Model: Arrays in MLX live in shared memory rather than being tied to one device. An operation can therefore run on any supported device type (currently the CPU and the GPU) without copying or transferring the data first.

- Composable Function Transforms: MLX provides function transforms for automatic differentiation, automatic vectorization, and computation graph optimization. Transforms can be nested, for example taking the gradient of a gradient.

- Lazy Computation: Operations in MLX build a computation graph instead of running immediately. Arrays are only materialized when their values are needed, for example when they are printed or explicitly evaluated, so results that are never used are never computed.

- Multi-Device Support: Any operation can be directed to any supported device (currently the CPU and the GPU), so work can be split between them according to what each handles best.

Design Philosophy and Inspiration

MLX draws inspiration from established frameworks such as PyTorch, JAX, and ArrayFire. Its main departure from them is the unified memory model: because arrays are not bound to a single device, MLX avoids copying data between the CPU and the GPU, which suits the shared-memory architecture of Apple silicon.

Target Audience and Use Cases

MLX is designed with machine learning practitioners in mind, offering them a powerful toolset for building and deploying models efficiently on Apple hardware. Its versatility makes it suitable for a wide range of applications:

Common Applications of MLX

- Linear Regression: Implement basic linear regression models with ease.

- Multi-Layer Perceptrons: Build and train small neural networks using the framework's array operations and automatic differentiation.

- Large Language Model Inference: Run inference on large language models directly on Apple silicon, using the GPU and unified memory.

Why Choose MLX?

For developers and researchers working with Apple silicon, MLX provides a unique combination of flexibility, efficiency, and performance. Its tight integration with Python and support for both high-level operations and low-level optimizations make it an ideal choice for demanding machine learning workloads.