Moe 8x7B

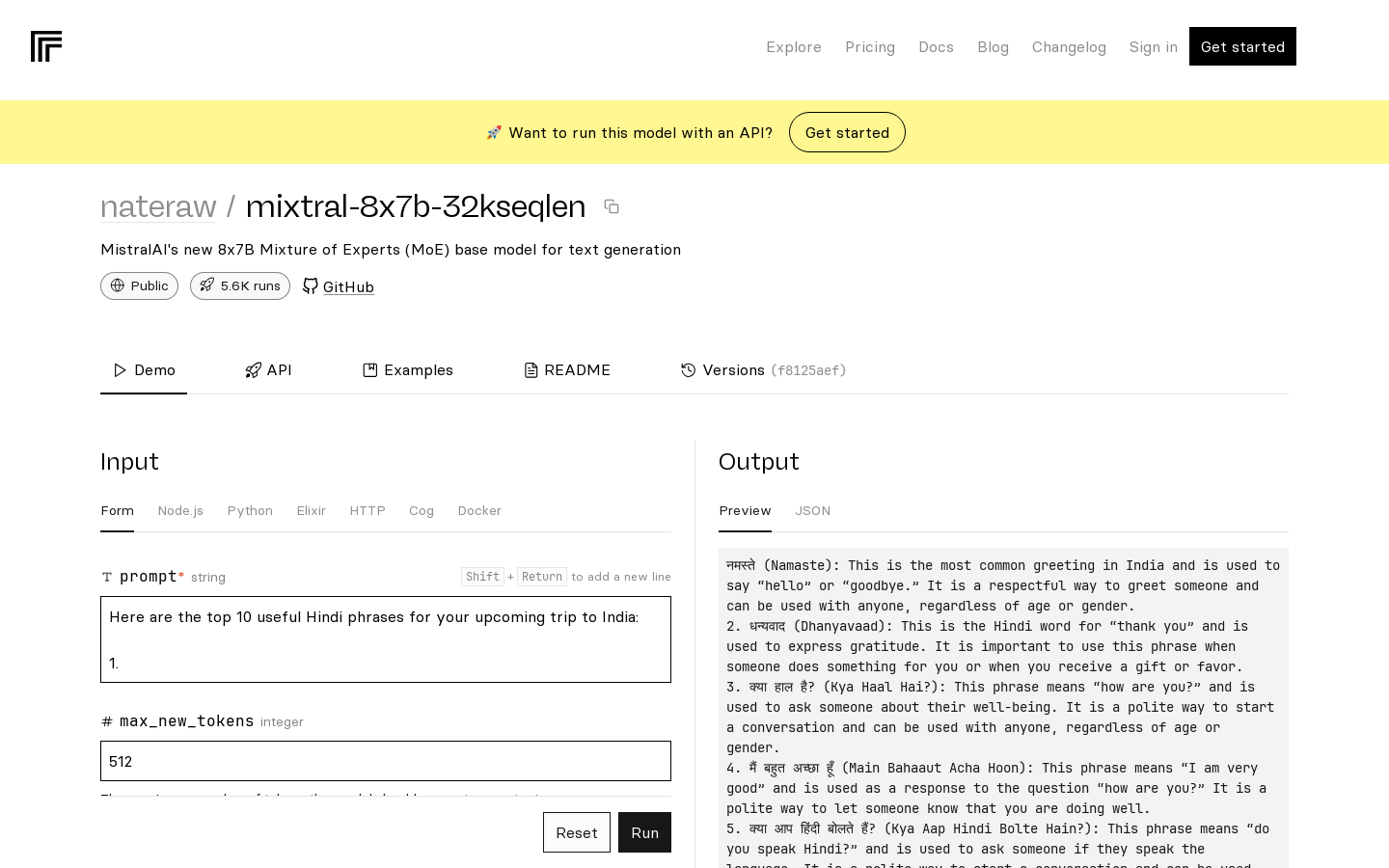

Moe 8x7B:MistralAI's New 8x7B Mixed-Expert Model for Text Generation

Tags:AI modelAI model AI text generation Mixed Expert Model Paid Standard Picks Text GenerationIntroducing MistralAI’s 8x7B Mixed-Expert Model for Text Generation

MistralAI is excited to announce the release of its cutting-edge 8x7B mixed-expert (MoE) base model designed specifically for high-quality text generation tasks. This innovative model leverages a unique architecture that combines the strengths of expert networks to deliver superior performance in generating coherent and contextually relevant text.

Key Highlights

This state-of-the-art model is built on MistralAI’s advanced mixed-expert (MoE) architecture, which optimizes both accuracy and computational efficiency. The 8x7B parameter count represents a significant leap forward in model capacity while maintaining the benefits of the MoE design:

- High-Quality Text Generation: Delivers natural and contextually appropriate text outputs across various domains.

- Scalability: Designed to handle a wide range of text generation tasks, from creative writing to technical documentation.

- Efficient Resource Utilization: The MoE architecture ensures optimal use of computational resources while maintaining high performance standards.

Pricing and Accessibility

MistralAI has implemented a flexible pricing model based on usage metrics to ensure cost-effectiveness for our diverse customer base. We encourage users to review the official documentation for detailed pricing tiers and service terms. Our goal is to make this powerful technology accessible to both individual developers and enterprise-level applications.

Target Applications

The 8x7B MoE model is versatile enough to be applied across multiple text generation domains, including:

- Content Creation: Article writing, blog posts, marketing copy, and technical documentation.

- Creative Writing: Fiction novels, poetry, dialogue scripts for games or films.

- Summarization: Automatically generating concise summaries from long-form text.

- Dialog Systems: Building intelligent conversation agents for customer support, virtual assistants, and interactive applications.

Technical Capabilities

Beyond its core functionality, the 8x7B model offers several technical advantages that make it a preferred choice for developers:

- Parameter Efficiency: The MoE architecture allows for better parameter utilization compared to traditional transformer models.

- Parallel Processing: Enhanced ability to handle large-scale text generation tasks efficiently.

- Adaptability: Fine-tuning capabilities to adapt the model for specific use cases and industries.

MistralAI is committed to pushing the boundaries of AI technology, and we believe this new model represents a significant step forward in text generation capabilities. Whether you’re an individual developer or part of a large organization, the 8x7B MoE model offers unmatched potential for innovation across various applications.