MoMask

MoMask: 3D Human Motion

Introduction to MoMask: A Text-Driven 3D Human Motion Generation Model

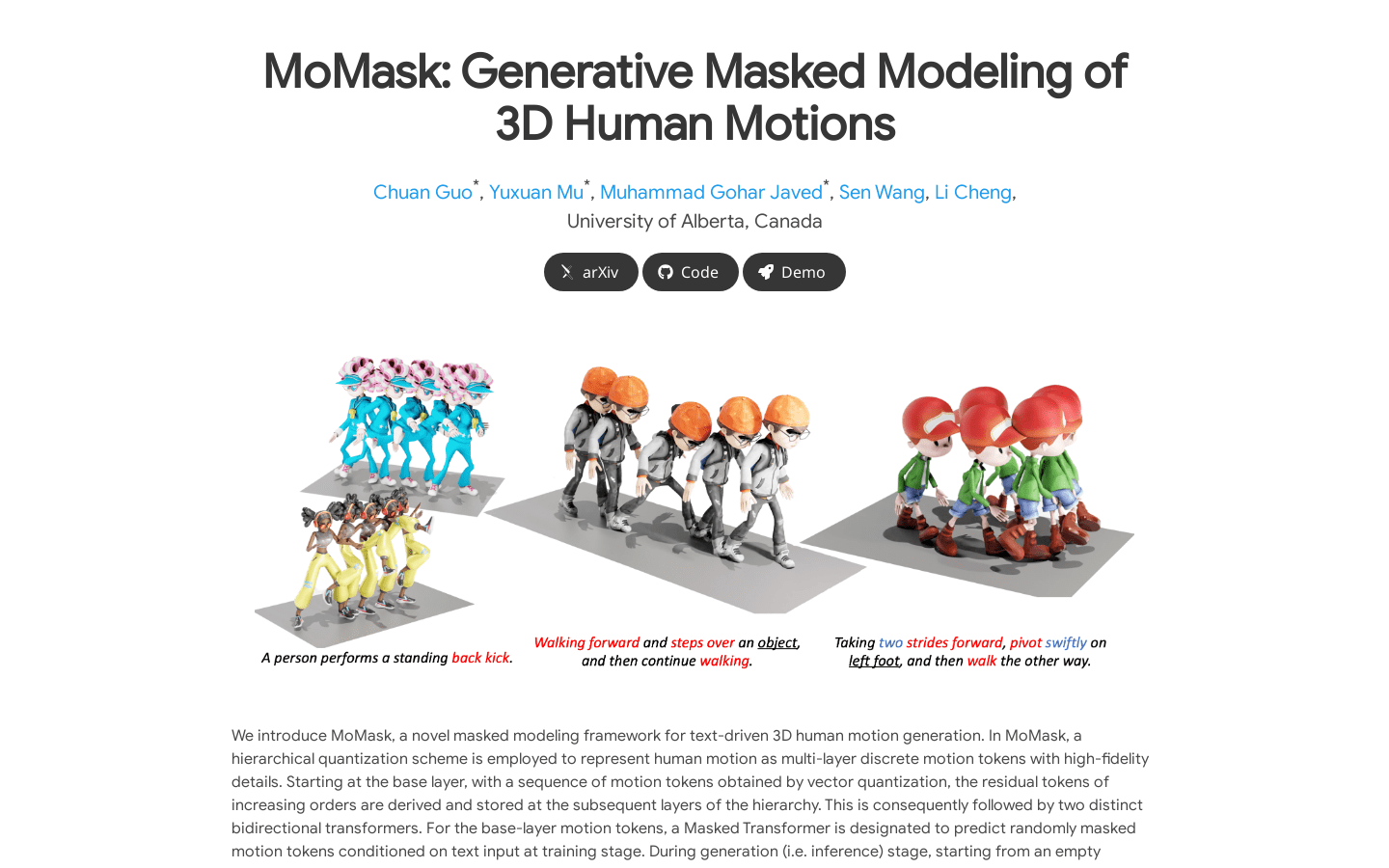

MoMask is a model for generating 3D human motion from text. It encodes human motion into multi-layer discrete tokens using a hierarchical (residual) quantization scheme: the base layer captures the coarse motion, and each additional layer encodes the quantization error left by the layers before it, so fine details are preserved and reconstructions stay high-fidelity.
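The residual idea behind this hierarchical quantization can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not MoMask's code: it quantizes a single latent vector with small hand-picked codebooks (hypothetical values; in MoMask the codebooks are learned and applied to a sequence of encoded motion frames), and the names `nearest`, `residual_quantize`, and `dequantize` are our own.

```python
from typing import List

Vector = List[float]
Codebook = List[Vector]


def nearest(codebook: Codebook, x: Vector) -> int:
    """Return the index of the code vector closest to x (squared Euclidean distance)."""
    return min(
        range(len(codebook)),
        key=lambda k: sum((c - v) ** 2 for c, v in zip(codebook[k], x)),
    )


def residual_quantize(x: Vector, codebooks: List[Codebook]) -> List[int]:
    """Encode x as one token per layer; each layer quantizes what earlier layers missed."""
    residual = list(x)
    tokens: List[int] = []
    for codebook in codebooks:
        k = nearest(codebook, residual)
        tokens.append(k)
        # Subtract the chosen code so the next layer only sees the remaining error.
        residual = [r - c for r, c in zip(residual, codebook[k])]
    return tokens


def dequantize(tokens: List[int], codebooks: List[Codebook]) -> Vector:
    """Reconstruct by summing the selected code vectors of the first len(tokens) layers."""
    out = [0.0] * len(codebooks[0][0])
    for k, codebook in zip(tokens, codebooks):
        out = [o + c for o, c in zip(out, codebook[k])]
    return out


if __name__ == "__main__":
    # Hypothetical codebooks with shrinking step sizes (coarse -> fine).
    codebooks = [
        [[0, 0], [1, 0], [0, 1], [-1, 0], [0, -1]],
        [[0, 0], [0.25, 0], [0, 0.25], [-0.25, 0], [0, -0.25]],
        [[0, 0], [0.125, 0.125], [-0.125, -0.125], [0.125, -0.125], [-0.125, 0.125]],
    ]
    x = [0.875, -0.375]
    tokens = residual_quantize(x, codebooks)
    print(tokens)  # [1, 4, 2]
    for n in range(1, len(tokens) + 1):
        print(n, dequantize(tokens[:n], codebooks))
```

Running it prints the tokens `[1, 4, 2]`; the reconstruction goes from `[1.0, 0.0]` with one layer to `[1.0, -0.25]` with two and to the exact input with all three, which is the coarse-to-fine behavior a hierarchical token scheme relies on.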

Architecture and Functionality

At its core, MoMask uses two complementary bidirectional Transformers to predict motion tokens from text. A Masked Transformer generates the base-layer tokens from the text input by iteratively filling in masked positions, and a Residual Transformer then predicts the tokens of the remaining layers, each conditioned on the layers below it. The first network establishes the overall motion, and the second adds the fine detail carried by the residual layers.
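How a bidirectional Masked Transformer turns a fully masked sequence into tokens can be illustrated with an iterative, confidence-based decoding loop in the style of MaskGIT, which is similar in spirit to how MoMask's Masked Transformer generates base-layer tokens. In this sketch, `predict` is a hypothetical stand-in for the network (it ignores text and returns a fixed token and confidence per position), and the cosine schedule and step count are illustrative assumptions rather than MoMask's exact settings.

```python
import math
from typing import Callable, List, Optional, Tuple

# A predictor sees the partially masked sequence (None = masked) and returns
# a (token, confidence) pair for every position.
Predictor = Callable[[List[Optional[int]]], List[Tuple[int, float]]]


def masked_decode(length: int, predict: Predictor, steps: int = 4) -> List[int]:
    """Fill a fully masked token sequence over `steps` parallel refinement passes."""
    tokens: List[Optional[int]] = [None] * length
    for step in range(1, steps + 1):
        preds = predict(tokens)
        candidates: List[int] = []
        confidence: List[float] = []
        for i, tok in enumerate(tokens):
            if tok is None:
                token, conf = preds[i]
                candidates.append(token)
                confidence.append(conf)
            else:
                # Tokens fixed in earlier passes are never re-masked.
                candidates.append(tok)
                confidence.append(math.inf)
        # Cosine schedule: how many positions stay masked after this pass (0 at the end).
        num_masked = math.floor(length * math.cos(math.pi / 2 * step / steps))
        lowest = set(sorted(range(length), key=lambda i: confidence[i])[:num_masked])
        tokens = [None if i in lowest else candidates[i] for i in range(length)]
    # After the final pass the schedule reaches zero, so every position is filled.
    return [t for t in tokens if t is not None]


if __name__ == "__main__":
    def dummy_predict(seq: List[Optional[int]]) -> List[Tuple[int, float]]:
        # Hypothetical predictor: token i % 5, with earlier positions more confident.
        return [(i % 5, 1.0 / (i + 1)) for i in range(len(seq))]

    print(masked_decode(10, dummy_predict))  # [0, 1, 2, 3, 4, 0, 1, 2, 3, 4]
```

With 10 positions and 4 passes, the number of masked positions shrinks 10, 9, 7, 3 before the last pass fills everything. In MoMask this kind of loop would produce only the base-layer tokens, conditioned on the text, with the Residual Transformer predicting the remaining layers afterwards; a trained model would also typically sample tokens from its predicted distribution rather than return a fixed choice.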

Performance and Applications

On the HumanML3D and KIT-ML text-to-motion benchmarks, MoMask outperforms prior methods in generating coherent and realistic 3D human motion sequences, reporting an FID of 0.045 on HumanML3D versus 0.141 for T2M-GPT. The same model also extends, without task-specific fine-tuning, to related applications such as:

- Text-guided temporal repair (inpainting): repairing damaged or incomplete motion sequences so that they become coherent and natural again.

- Dynamic motion synthesis: Generating complex and nuanced movements based on textual descriptions.
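Temporal repair fits the same masked-token view: keep the tokens of the intact parts of a motion, mask the span to be regenerated, and let the model fill only that span. The sketch below is a hypothetical illustration of that idea, not MoMask's API: `inpaint` and `predict` are names introduced here, positions are token indices rather than raw frames, and the predictor is a stand-in for the text-conditioned Transformer.

```python
from typing import Callable, List, Optional, Tuple

# Hypothetical predictor: returns a (token, confidence) pair for every position.
Predictor = Callable[[List[Optional[int]]], List[Tuple[int, float]]]


def inpaint(tokens: List[int], start: int, end: int, predict: Predictor) -> List[int]:
    """Regenerate tokens in [start, end) while keeping the surrounding context fixed."""
    if not 0 <= start <= end <= len(tokens):
        raise ValueError(f"invalid span [{start}, {end}) for {len(tokens)} tokens")
    # Mask only the span to repair; None marks a masked position.
    seq: List[Optional[int]] = [None if start <= i < end else t for i, t in enumerate(tokens)]
    while any(t is None for t in seq):
        preds = predict(seq)
        masked = [i for i, t in enumerate(seq) if t is None]
        # Commit the single most confident prediction, then re-run with the new context.
        best = max(masked, key=lambda i: preds[i][1])
        seq[best] = preds[best][0]
    return [t for t in seq if t is not None]


if __name__ == "__main__":
    original = [7, 7, 7, 7, 7, 7]

    def dummy_predict(seq: List[Optional[int]]) -> List[Tuple[int, float]]:
        # Hypothetical predictor that always proposes token 0 with equal confidence.
        return [(0, 1.0) for _ in seq]

    print(inpaint(original, 2, 4, dummy_predict))  # [7, 7, 0, 0, 7, 7]
```

Only the masked span changes; the tokens before and after it are passed to the predictor unchanged as context. Committing one position per call keeps the sketch short, whereas a real implementation would more likely fill several masked positions per pass.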

Key Features of MoMask

- Text-based 3D Human Motion Generation: Converts textual descriptions into detailed, lifelike 3D human motion sequences.

- Hierarchical Quantization Scheme: Uses residual quantization, in which each layer of tokens encodes what the previous layers missed, so both the coarse structure and the fine details of a motion are preserved.

- Generalizability Across Tasks: Beyond its primary function in text-to-motion generation, MoMask demonstrates exceptional adaptability in related domains such as temporal repair and dynamic sequence prediction.

MoMask is a strong option for researchers and developers who want to add text-driven 3D motion generation to their applications. Its combination of bidirectional Transformers and hierarchical quantization has made it a reference point among generative motion models.