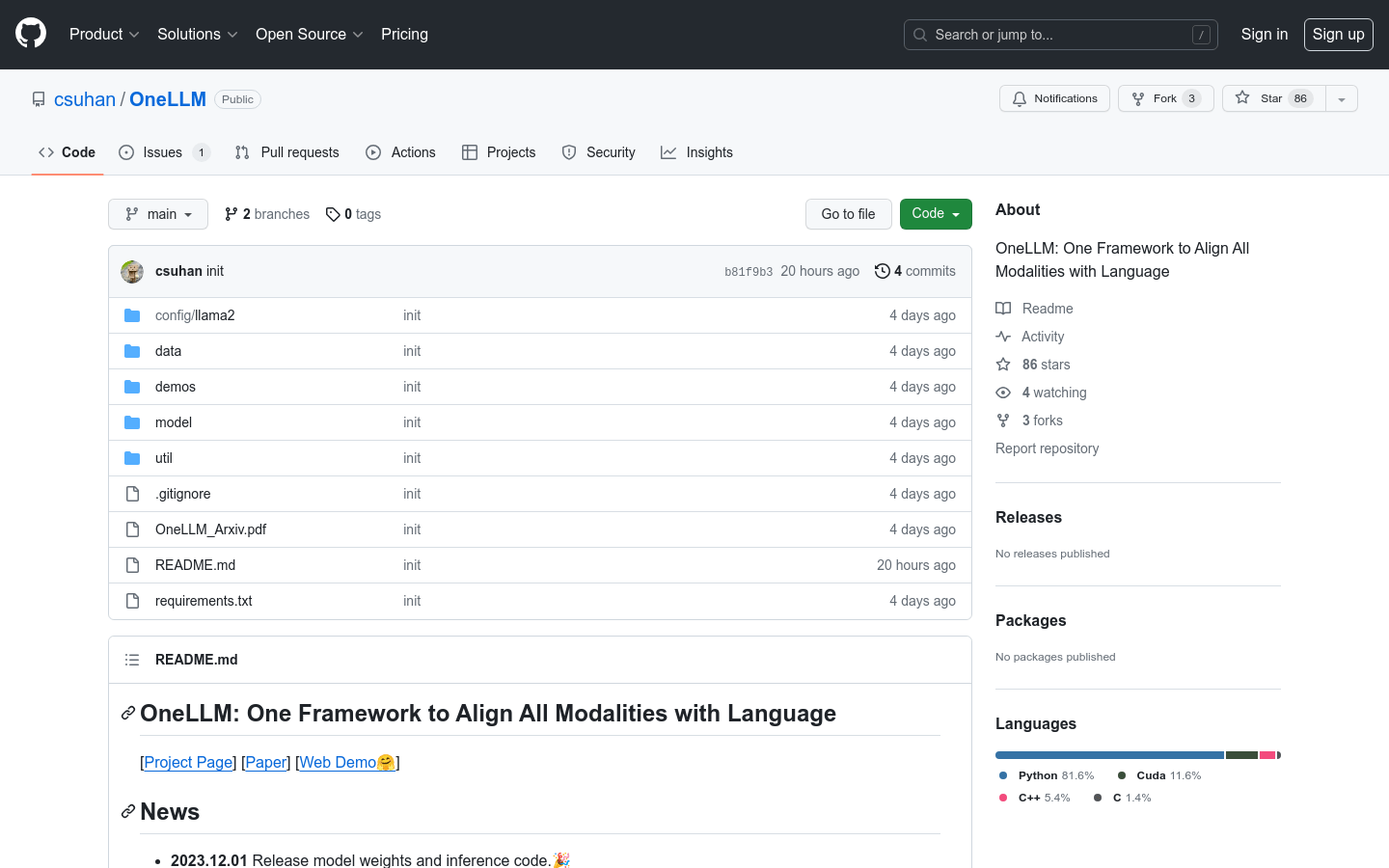

OneLLM: A Framework for Unified Language Modalities

Tags: AI model, AI development assistant, Image Processing, Multimodal, Open Source, Standard Picks, Text Processing

Introduction to OneLLM: A Unified Framework for Multimodal Processing

OneLLM is a framework for handling multiple language modalities in a unified way. It provides preview models and supports running demonstrations locally, which makes it a useful resource for developers and researchers.

Key Features of OneLLM

Model Installation: OneLLM simplifies the process of installing various models, allowing users to easily integrate different language processing tools into their workflows.

Preview Models: The platform offers a range of preview models that users can experiment with, providing a hands-on approach to testing and development.

Local Demonstrations: OneLLM’s ability to run demonstrations locally makes it highly accessible, eliminating the need for external servers or cloud infrastructure.

Target Audience

OneLLM is specifically tailored for professionals dealing with complex multimodal tasks. Its primary users include:

- Researchers exploring cutting-edge language processing techniques

- Developers creating sophisticated natural language applications

- Engineers working on large-scale multilingual projects

Practical Applications of OneLLM

OneLLM’s versatility extends across various domains:

- Image Annotation: Streamline the process of annotating images by integrating text processing capabilities directly into your workflow.

- Video Description Generation: Enhance video analysis by combining visual and textual data, creating more comprehensive and accurate descriptions.

- Speech Recognition & Text Processing: Simplify the integration of speech-to-text technologies with OneLLM’s unified processing capabilities.

In summary, OneLLM acts as a bridge between different language modalities, making complex multimodal tasks more manageable. Its model installation, preview models, and local demonstrations make it a practical tool for people working in natural language processing.