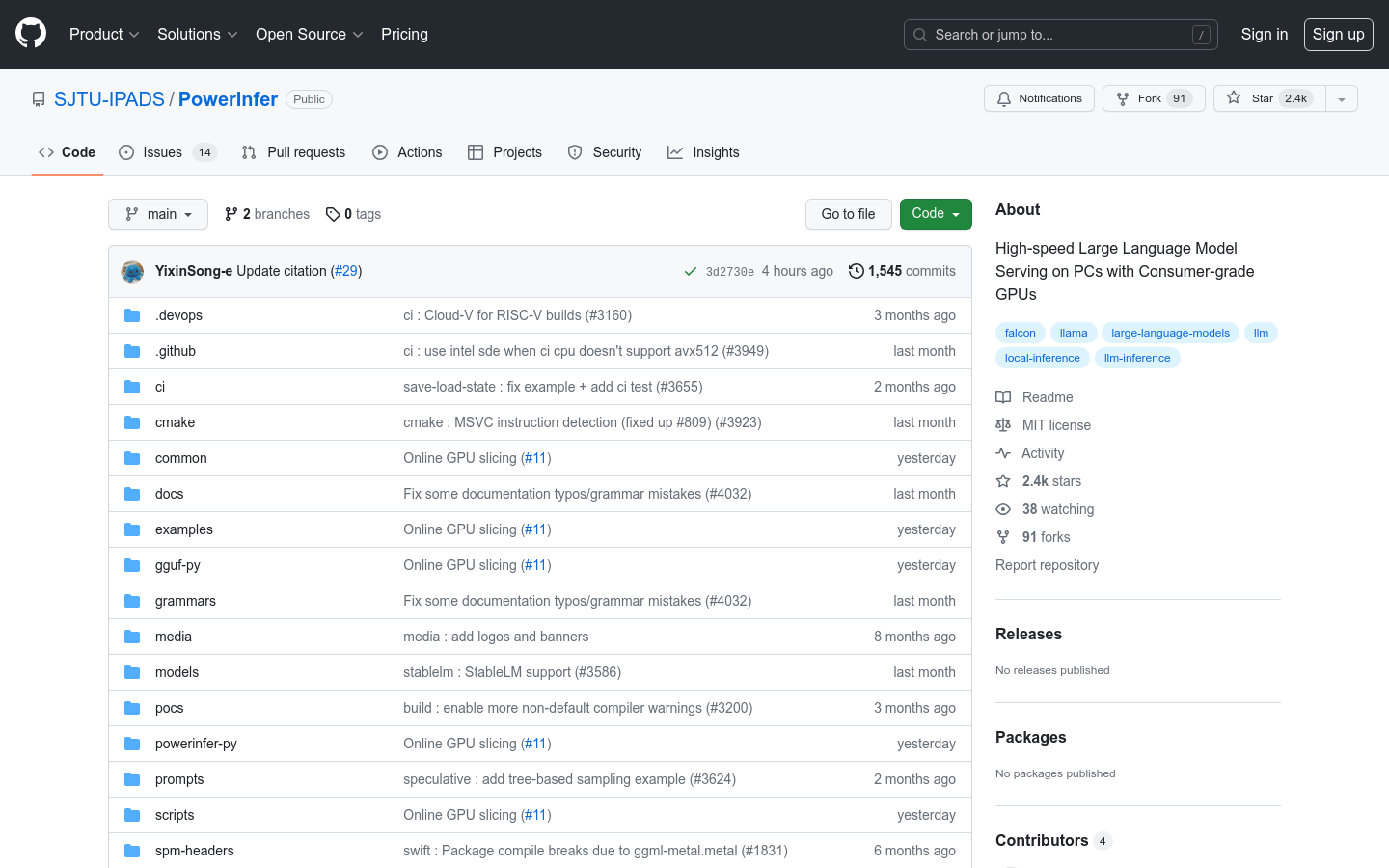

PowerInfer


Introduction to PowerInfer

PowerInfer is an engine for high-speed inference of large language models (LLMs) on consumer-grade GPUs in personal computers. It exploits the locality of LLM inference: a small subset of ‘hot’ neurons is activated consistently across inputs, while the majority of ‘cold’ neurons activate only for specific inputs. PowerInfer preloads the hot neurons onto the GPU and computes the cold neurons on the CPU, which substantially reduces GPU memory demand and the volume of data transferred between CPU and GPU. It also integrates adaptive predictors and neuron-aware sparse operators, which make neuron activation and sparse computation more efficient.
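To make the hot/cold split concrete, here is a minimal Python sketch of one way to partition neurons, assuming offline profiling has already measured how often each neuron fires. The `Neuron` record, `partition_neurons`, and `gpu_hit_ratio` are hypothetical names used only for illustration, and the greedy rule (fill the GPU memory budget with the most frequently activated neurons first) is a simplified stand-in; PowerInfer's own placement policy is more involved.

```python
from dataclasses import dataclass


@dataclass
class Neuron:
    index: int              # position of the neuron within its layer
    activation_freq: float  # fraction of profiled tokens on which it fired
    size_bytes: int         # memory its weights occupy


def partition_neurons(neurons, gpu_budget_bytes):
    """Hypothetical greedy hot/cold split: visit neurons from most to least
    frequently activated and place each on the GPU if it still fits the
    budget; every other neuron stays on the CPU."""
    hot, cold = [], []
    used = 0
    for nr in sorted(neurons, key=lambda n: n.activation_freq, reverse=True):
        if used + nr.size_bytes <= gpu_budget_bytes:
            hot.append(nr.index)
            used += nr.size_bytes
        else:
            cold.append(nr.index)
    return sorted(hot), sorted(cold)


def gpu_hit_ratio(neurons, hot_ids):
    """Share of expected activations that land on GPU-resident (hot) neurons."""
    hot = set(hot_ids)
    total = sum(n.activation_freq for n in neurons)
    on_gpu = sum(n.activation_freq for n in neurons if n.index in hot)
    return on_gpu / total if total else 0.0


# Toy profile: five equally sized neurons with skewed activation frequencies.
profile = [
    Neuron(0, 0.91, 4),
    Neuron(1, 0.05, 4),
    Neuron(2, 0.67, 4),
    Neuron(3, 0.02, 4),
    Neuron(4, 0.40, 4),
]
hot, cold = partition_neurons(profile, gpu_budget_bytes=8)
print(hot, cold)                              # [0, 2] [1, 3, 4]
print(round(gpu_hit_ratio(profile, hot), 3))  # 0.771
```

Because activation frequencies are skewed, a GPU budget that holds only two of the five toy neurons still serves about 77% of the expected activations; the remaining, rarely used neurons can be computed on the CPU.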

On a single NVIDIA RTX 4090 GPU, PowerInfer achieves an average generation rate of 13.20 tokens per second across the models its authors evaluated. That is only 18% lower than the rate reached on a top-tier server-grade A100 GPU, and model accuracy is retained.

Target Audience

PowerInfer is aimed at users who need fast LLM inference in local deployments: developers, researchers, and enthusiasts who want to run powerful LLMs without relying on expensive server infrastructure.

Key Features of PowerInfer

- Efficient Inference Through Sparse Activation and Neuron Partitioning: PowerInfer exploits activation sparsity and classifies neurons as ‘hot’ (frequently activated) or ‘cold’ (activated only for specific inputs). Keeping hot neurons on the GPU and cold neurons on the CPU reduces GPU memory usage and speeds up processing on consumer-grade hardware.

- Seamless Integration of CPU-GPU Resources: PowerInfer combines the memory and compute capacity of both processors, computing hot neurons on the GPU and cold neurons on the CPU, so the workload stays balanced and processing is faster.

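- Compatibility with ReLU-Sparse Models: PowerInfer supports popular ReLU-sparse models, such as ReLU variants of LLaMA and Falcon. In these models the ReLU activation leaves many neurons at exactly zero for any given input, and this activation sparsity is what PowerInfer's design relies on.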

- Optimized for Local Deployments: Designed and optimized for local deployment on consumer-grade hardware, PowerInfer enables low-latency LLM inference and serving on a single GPU.

- Backward Compatibility: Although PowerInfer is distinct from llama.cpp, it can also run inference with llama.cpp's model weights for compatibility purposes; with those weights, however, it provides no performance gain.
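The features listed above (ReLU sparsity, activation prediction, and a hot/cold CPU-GPU split) can be sketched together in a toy feed-forward layer. This is a minimal pure-Python illustration under stated assumptions, not PowerInfer's implementation: all names are hypothetical, the "GPU" and "CPU" partitions are ordinary function calls, and `oracle_predictor` stands in for PowerInfer's learned predictors by computing the exact pre-activations, so it shows the interface but none of the savings.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def relu(z):
    return z if z > 0 else 0.0


def partial_ffn(x, neuron_ids, w_up, w_down):
    """Sum the contributions of only the listed neurons (a neuron-aware sparse pass).
    w_up[i] is neuron i's input weight row; w_down[i] is its output weight row."""
    out = [0.0] * len(w_down[0])
    for i in neuron_ids:
        a = relu(dot(w_up[i], x))
        for j, w in enumerate(w_down[i]):
            out[j] += a * w
    return out


def dense_ffn(x, w_up, w_down):
    """Reference: evaluate every neuron, including those ReLU zeroes out."""
    return partial_ffn(x, range(len(w_up)), w_up, w_down)


def oracle_predictor(x, w_up):
    """Hypothetical stand-in for a learned activation predictor: returns the
    neurons whose pre-activation is positive (it computes every projection)."""
    return {i for i, row in enumerate(w_up) if dot(row, x) > 0}


def hybrid_ffn(x, w_up, w_down, hot_ids, active_ids):
    """Split predicted-active neurons into a hot ('GPU') and a cold ('CPU')
    partition, compute each partially, then merge the two partial outputs."""
    hot = set(hot_ids)
    gpu_ids = sorted(i for i in active_ids if i in hot)
    cpu_ids = sorted(i for i in active_ids if i not in hot)
    gpu_out = partial_ffn(x, gpu_ids, w_up, w_down)  # would run on the GPU
    cpu_out = partial_ffn(x, cpu_ids, w_up, w_down)  # would run on the CPU
    merged = [g + c for g, c in zip(gpu_out, cpu_out)]
    return merged, len(gpu_ids) + len(cpu_ids)


x = [1.0, 2.0, -1.0]
w_up = [[1.0, 1.0, 0.0], [-1.0, 0.0, 1.0], [0.0, 1.0, 1.0], [0.0, -1.0, 0.0]]
w_down = [[1.0, 2.0], [3.0, 1.0], [-1.0, 1.0], [2.0, 2.0]]

active = oracle_predictor(x, w_up)  # {0, 2}
out, computed = hybrid_ffn(x, w_up, w_down, hot_ids=[0, 1], active_ids=active)
print(out, computed)                # [2.0, 7.0] 2
print(dense_ffn(x, w_up, w_down))   # [2.0, 7.0]
```

Because ReLU maps every inactive neuron to exactly zero, skipping the neurons a perfect predictor marks inactive leaves the output unchanged while computing only 2 of the 4 neurons here. With a learned predictor, any deviation from the dense result would come from mispredicted neurons.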

PowerInfer represents a significant leap forward in making powerful LLMs accessible to everyday users, bridging the gap between server-grade performance and consumer hardware capabilities.