Reconfusion

Reconfusion:Diffusion-Based 3D Reconstruction

Tags:AI image generation3D Reconstruction AI 3D Tools AI image generation Diffusion Prior Geometry Synthesis Image Synthesis Open Source Standard PicksIntroduction

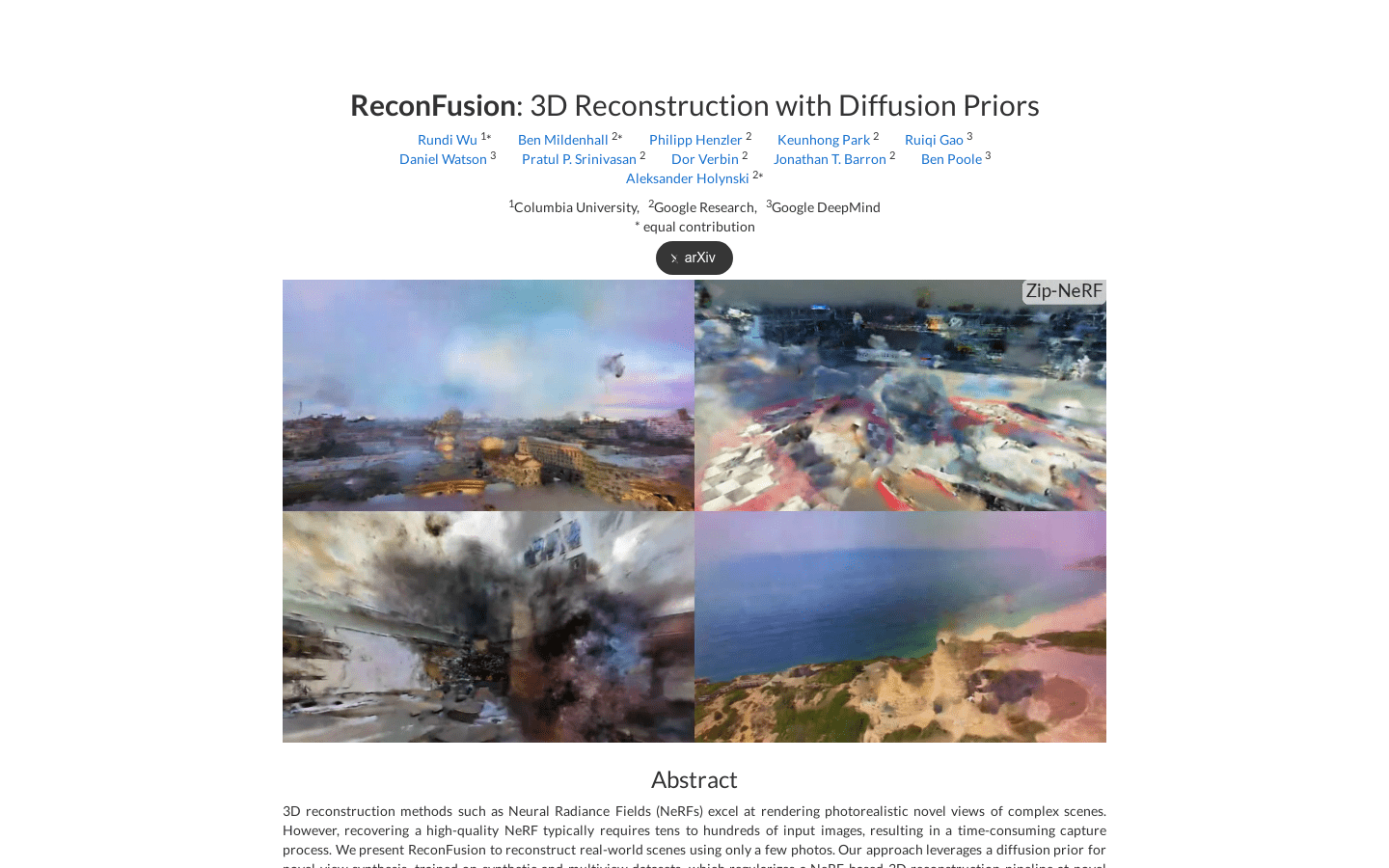

The ReconFusion framework represents an innovative approach to 3D scene reconstruction, utilizing diffusion-based priors to create realistic models from limited photographic input. By integrating Neural Radiance Fields (NeRFs) with advanced diffusion techniques, this method enables the generation of highly accurate geometry and textures for regions beyond the captured viewpoints. The system is designed to operate effectively across both limited-view and multi-view datasets during training, ensuring robust performance in unconstrained areas while maintaining consistency with observed features.

Comprehensive testing has been conducted on diverse real-world datasets, including challenging scenarios such as forward-facing and fully spherical (360-degree) environments. These evaluations have consistently demonstrated significant improvements in reconstruction accuracy and visual fidelity compared to existing methods.

Target Audience

ReconFusion is particularly well-suited for applications where 3D reconstruction must be achieved from a limited number of viewpoints or perspectives. Its ability to extrapolate realistic geometric and textural information into unobserved regions makes it an ideal solution for scenarios requiring high-quality 3D models without extensive data capture.

Applications

Application 1: Medical Imaging – ReconFusion can be employed to construct detailed 3D models of human organs based on a minimal number of imaging viewpoints. This capability holds significant potential for enhancing diagnostic accuracy and reducing the need for extensive patient exposure to imaging procedures.

Application 2: Architectural Design – The method is highly effective in generating realistic digital representations of buildings and complex structures from limited-angle photography. This can greatly accelerate the design process by providing accurate spatial information with minimal input data.

Application 3: Virtual Reality (VR) Development – ReconFusion enables the creation of immersive virtual environments using just a few high-quality input images. This approach significantly reduces the resources required for VR content generation while maintaining visual realism and detail.

Key Features

NeRF-Based Optimization: The framework employs NeRF to minimize reconstruction loss and optimize sample generation, ensuring accurate modeling of scene geometry and appearance.

PixelNeRF-Style Image Synthesis: Utilizes a PixelNeRF-inspired architecture to generate high-resolution sample images, providing detailed textural information for reconstructed scenes.

Diffusion Model Integration: Combines noise-based latent variables with advanced diffusion models to refine and decode output samples, resulting in more realistic and accurate 3D reconstructions.