
Video-LLaVA: Joint Visual Representations via Alignment Before Projection

Overview

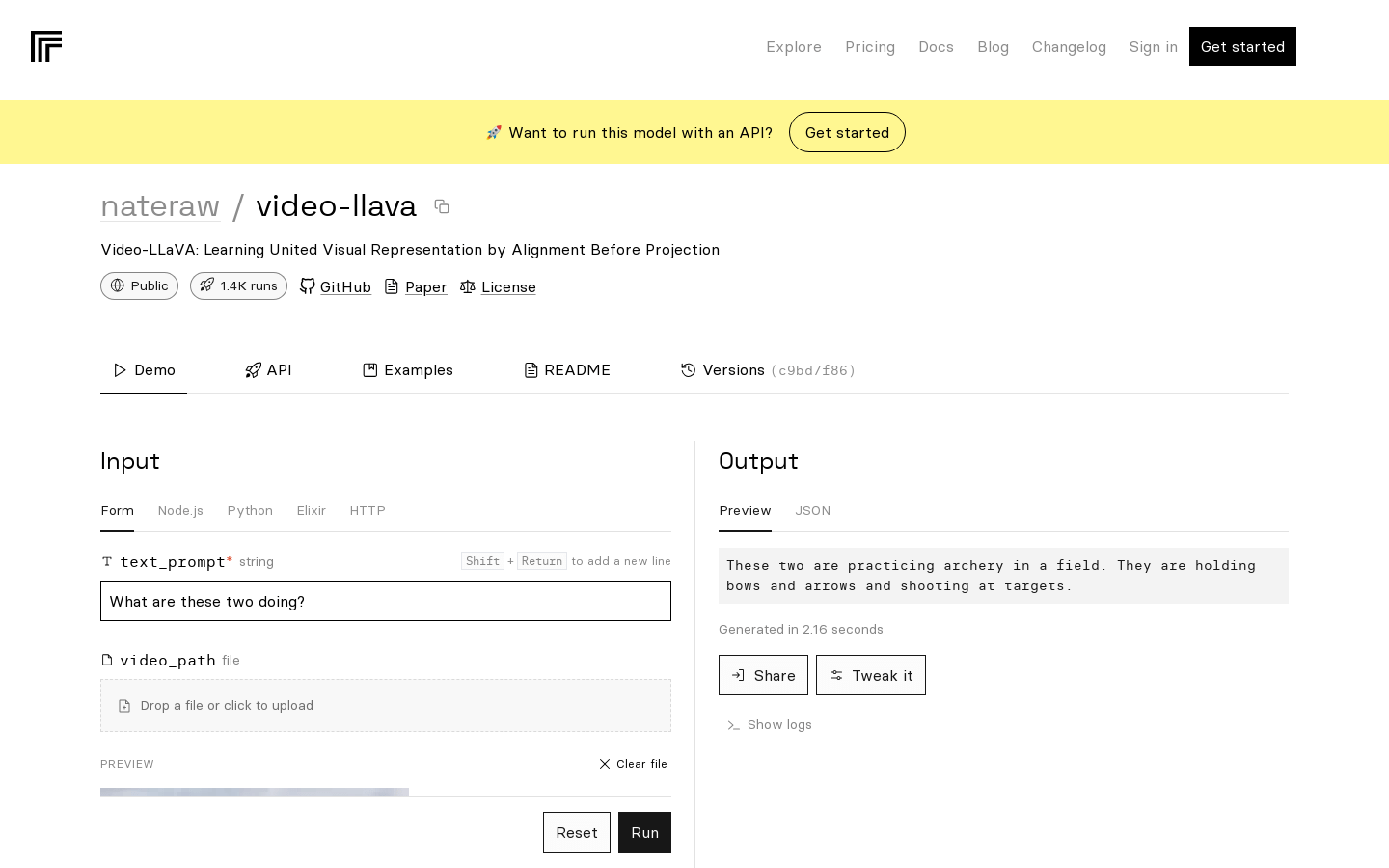

Video-LLaVA learns a joint visual representation for images and videos through alignment before projection: image and video features are first aligned in a shared feature space, and only then mapped into the language model's input space by a single shared projection layer. Because both modalities share one representation, the same model can handle both image and video understanding. The design is also efficient in training and inference, which makes it suitable for a wide range of video processing tasks and other visual applications.
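To make the alignment step concrete, here is a toy sketch in Python. It is not Video-LLaVA's code: the encoders are hypothetical stand-ins whose outputs are assumed to already live in one shared feature space, and the dimensions and helper names (`toy_encoder`, `encode_image`, `encode_video`, `shared_projection`) are illustrative only. What it shows is that once both modalities share a space, one projection maps image and video features alike into the language model's input.

```python
import random

# Hypothetical sizes, chosen only for illustration.
SHARED_DIM = 4  # dimension of the shared (pre-aligned) visual feature space
LLM_DIM = 6     # dimension of the language model's input embeddings


def matvec(matrix, vec):
    """Multiply a matrix (a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]


def make_projection(out_dim, in_dim, seed=0):
    """Random linear map standing in for a learned projection layer."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(in_dim)] for _ in range(out_dim)]


def toy_encoder(values, dim=SHARED_DIM):
    """Hypothetical pre-aligned encoder: folds raw numbers into one shared-space feature."""
    feature = [0.0] * dim
    for i, v in enumerate(values):
        feature[i % dim] += v
    return feature


def encode_image(image):
    # One feature per image here (real encoders emit many patch tokens).
    return [toy_encoder(image)]


def encode_video(frames):
    # One feature per frame, in the SAME space as image features.
    return [toy_encoder(frame) for frame in frames]


def project(features, projection):
    # A single projection maps every aligned feature, image or video, into LLM space.
    return [matvec(projection, f) for f in features]


shared_projection = make_projection(LLM_DIM, SHARED_DIM)
image_tokens = project(encode_image([0.1, 0.2, 0.3, 0.4, 0.5]), shared_projection)
video_tokens = project(encode_video([[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]]), shared_projection)
print(len(image_tokens), len(video_tokens), len(image_tokens[0]))  # 1 3 6
```

Because alignment happens first, the projection needs no modality-specific weights: in this sketch, an image and a one-frame video with identical content produce identical tokens.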

Target Users

Video-LLaVA is aimed at professionals working in:

- Video Processing: Video analysis, editing, and optimization, among other tasks.

- Visual Tasks: Image recognition, computer vision research, and related fields.

Use Cases

Video-LLaVA can be applied in scenarios such as:

- Video Classification: Assigning videos to predefined categories to support content management.

- Image Retrieval: Finding relevant images based on their visual features.

- Object Tracking: Following objects across video frames for applications such as surveillance and robotics.
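The image retrieval use case can be illustrated with a generic nearest-neighbor search over feature vectors. This is a minimal sketch, not Video-LLaVA's API: the gallery, file names, and 3-dimensional feature vectors are hypothetical, and in practice the features would come from a visual encoder. Items are ranked by cosine similarity to a query feature.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, gallery, top_k=2):
    """Return the names of the top_k gallery items most similar to the query feature."""
    scored = [(cosine_similarity(query, feat), name) for name, feat in gallery.items()]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [name for _, name in scored[:top_k]]


# Hypothetical precomputed image features.
gallery = {
    "beach.jpg": [0.9, 0.1, 0.0],
    "forest.jpg": [0.1, 0.9, 0.2],
    "sunset.jpg": [0.8, 0.2, 0.1],
}
print(retrieve([1.0, 0.0, 0.0], gallery))  # ['beach.jpg', 'sunset.jpg']
```

Cosine similarity compares the direction of feature vectors and ignores their magnitude, which is a common choice for embedding search.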

Features

Key features of Video-LLaVA include:

- Learning Joint Visual Representations: Images and videos are encoded into a single shared representation space, so one model handles both.

- Alignment Before Projection: Image and video features are aligned with each other before being projected into the language model, so a single projection serves both modalities.

- Efficient Learning and Inference: The model is designed for efficient training and fast inference, which suits time-sensitive applications.

Together, these capabilities make Video-LLaVA a useful tool for researchers and practitioners in computer vision and related disciplines who work on video processing and visual understanding.