VividTalk

VividTalk: Create Realistic Lip-Synced Talking Head Videos

Tags: AI avatar generation, AI video generation, Audio-driven Avatar Generation, Image Animation, Open Source, Standard Picks, Video synthesis

Introduction to VividTalk

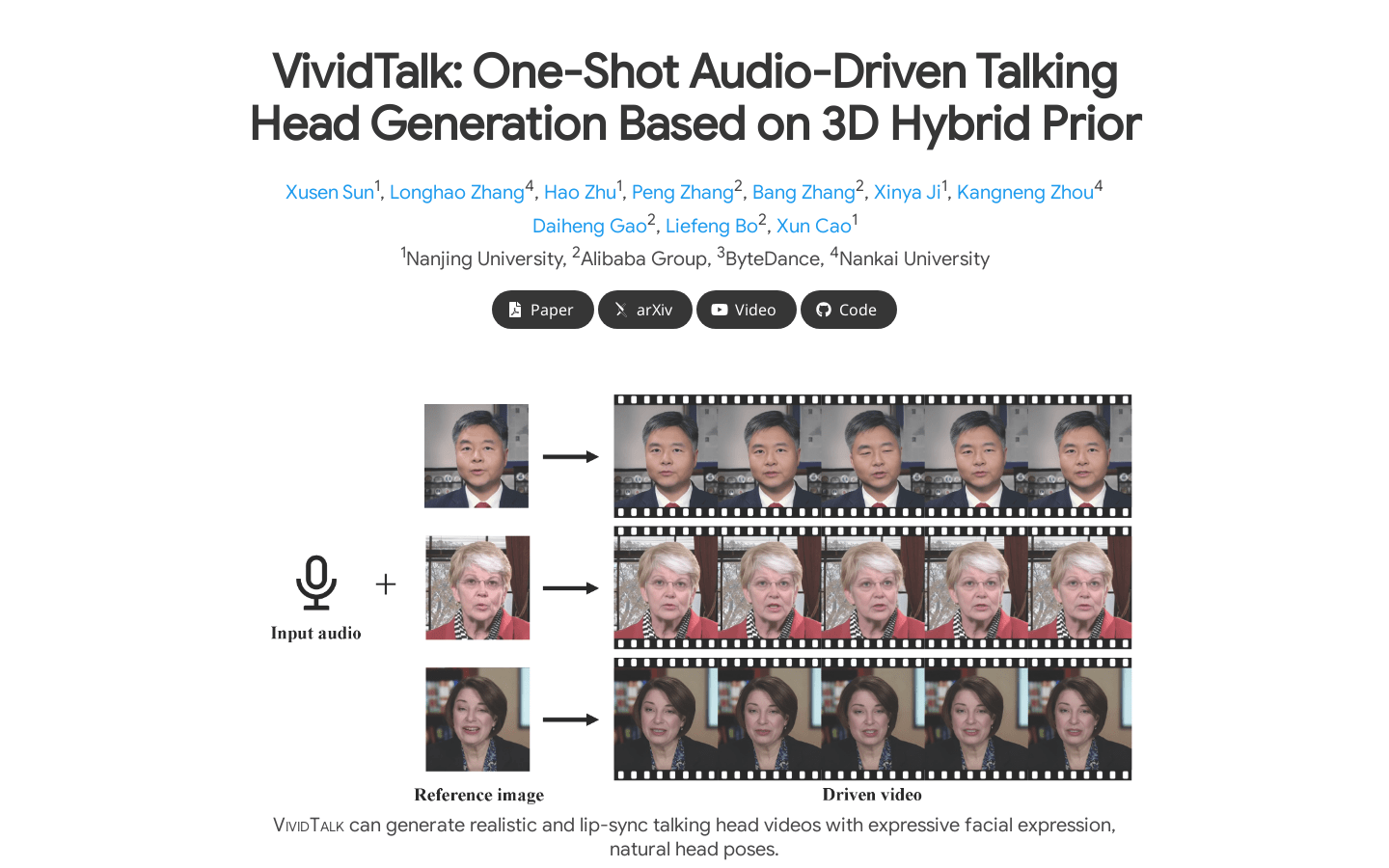

VividTalk is a one-shot, audio-driven talking head generation method built on a 3D hybrid prior. From a single reference image and an audio clip, it generates realistic talking head videos with expressive facial motion, natural head movements, and accurate lip synchronization. The system uses a two-stage framework so that all of these attributes appear together in the output.

In its first stage, VividTalk processes audio input by mapping it to a 3D mesh structure. This involves capturing two distinct types of motion:

- Facial Motion: Both blendshapes and vertex offsets are used as the intermediate representation. Blendshapes capture coarse expression changes, while per-vertex offsets add fine detail such as lip shape, which increases the model's capacity to represent complex facial movements.

- Head Motion: A learnable head pose codebook is trained with a two-phase training mechanism to produce smooth and natural head movements.
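
The two motion types above can be illustrated with a minimal sketch. It is not VividTalk's code: the function names, the data layout (nested lists of coordinates), and the nearest-entry lookup are all assumptions made for illustration. The first function combines coarse blendshape-style weights with per-vertex offsets to deform a neutral mesh; the second picks the closest entry from a small head pose codebook, as vector-quantized models commonly do.

```python
from typing import List, Sequence

Point = List[float]  # one vertex as [x, y, z], or one pose as [yaw, pitch, roll]


def apply_facial_motion(
    neutral: Sequence[Point],
    blendshapes: Sequence[Sequence[Point]],
    weights: Sequence[float],
    vertex_offsets: Sequence[Point],
) -> List[Point]:
    """Deform a neutral mesh (hypothetical helper).

    neutral:        V vertices of the resting face
    blendshapes:    K shapes, each holding V per-vertex deltas
    weights:        K scalar weights, one per blendshape
    vertex_offsets: V fine-grained corrections added after the blend
    """
    if len(blendshapes) != len(weights):
        raise ValueError("need exactly one weight per blendshape")
    mesh = []
    for v, base in enumerate(neutral):
        point = []
        for axis in range(3):
            # Coarse motion: weighted sum of blendshape deltas at this vertex.
            coarse = sum(w * shape[v][axis] for w, shape in zip(weights, blendshapes))
            # Fine motion: per-vertex offset on top of the blend.
            point.append(base[axis] + coarse + vertex_offsets[v][axis])
        mesh.append(point)
    return mesh


def nearest_pose_index(codebook: Sequence[Point], query: Point) -> int:
    """Return the index of the codebook pose closest to the query pose."""
    def squared_distance(a: Point, b: Point) -> float:
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(range(len(codebook)), key=lambda i: squared_distance(codebook[i], query))


# Toy example: a two-vertex "mesh", two blendshapes, three stored poses.
neutral = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]]
blendshapes = [
    [[0.0, 1.0, 0.0], [0.0, 0.0, 0.0]],  # moves vertex 0 along y
    [[0.0, 0.0, 0.0], [0.0, 0.0, 2.0]],  # moves vertex 1 along z
]
mesh = apply_facial_motion(neutral, blendshapes, [0.5, 0.25],
                           [[0.1, 0.0, 0.0], [0.0, 0.0, 0.0]])
pose_index = nearest_pose_index(
    [[0.0, 0.0, 0.0], [10.0, 0.0, 0.0], [0.0, 15.0, 0.0]], [8.0, 1.0, 0.0]
)
```

In a real system the weights, offsets, and pose would be predicted from audio features by a trained network; here they are hard-coded so the arithmetic is easy to follow.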

In the second stage, VividTalk uses a dual-branch motion VAE (Variational Autoencoder) together with a generator network. The motion VAE converts the meshes produced in the first stage into dense motion, and the generator then synthesizes high-quality video frame by frame.
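
For readers unfamiliar with VAEs, the sketch below shows the two standard pieces any VAE relies on: the reparameterization trick for sampling a latent code and the KL divergence term that regularizes it. The `sample_dual_branch` helper is purely hypothetical. This page does not say what each of the two branches encodes or how their outputs are merged, so the sketch simply samples each branch and concatenates the results.

```python
import math
import random
from typing import List, Sequence, Tuple

Branch = Tuple[Sequence[float], Sequence[float]]  # (mu, logvar) from one encoder


def reparameterize(mu: Sequence[float], logvar: Sequence[float],
                   rng: random.Random) -> List[float]:
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), where sigma = exp(logvar / 2)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]


def kl_to_standard_normal(mu: Sequence[float], logvar: Sequence[float]) -> float:
    """KL divergence between N(mu, sigma^2) and N(0, 1), summed over dimensions."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, logvar))


def sample_dual_branch(branch_a: Branch, branch_b: Branch,
                       rng: random.Random) -> List[float]:
    """Hypothetical merge step: sample each branch, then concatenate the latents."""
    z_a = reparameterize(branch_a[0], branch_a[1], rng)
    z_b = reparameterize(branch_b[0], branch_b[1], rng)
    return z_a + z_b


rng = random.Random(0)  # fixed seed so repeated runs give the same sample
latent = sample_dual_branch(([0.2, -0.1], [0.0, 0.0]), ([1.0, 0.5], [-2.0, -2.0]), rng)
```

A decoder (not shown) would map the sampled latent to a dense motion field, meaning a displacement for every pixel, which the generator uses to render each frame.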

Performance Validation

The reported experiments show that VividTalk produces talking head videos with strong lip synchronization and realism. Compared with existing state-of-the-art methods, it achieves better results both objectively (on quantitative metrics) and subjectively (in human evaluation). The evaluation covers the following aspects:

- Lip sync accuracy

- Naturalness of head movements

- Identity preservation in avatars

- Overall video quality

Target Audience and Applications

Primary Users

VividTalk is designed for:

- Content creators seeking to produce engaging talking head videos.

- Marketing professionals aiming to create dynamic promotional content.

- Researchers in the field of computer graphics and animation.

Potential Use Cases

- Virtual Host Production: Generate expressive avatars for live streaming, virtual events, or interactive media.

- Cartoon-Style Animations: Create charming animated characters driven by audio inputs for entertainment content.

- Multilingual Content Creation: Produce avatar-driven videos in multiple languages for global audiences.

Key Features

- Realistic Video Generation: Produces high-quality talking head videos with lifelike expressions and natural movements.

- Diverse Style Support: Animates faces in a range of styles, from realistic human portraits to cartoon characters.

- Multilingual Compatibility: Works across different languages to meet global content demands.

By addressing critical challenges in audio-driven avatar generation, VividTalk sets a new standard for creating engaging and realistic video content. Its modular architecture and innovative techniques make it a powerful tool for both research and practical applications.